Nicegui widgets/Tutorial: Difference between revisions


</source> | </source> | ||

== Adding a RESTFul service ==

=== eventseries_api === | |||

{{Ticket | |||

|number=59 | |||

|title=refactor eventseries RESTFul service to nicegui/FastAPI | |||

|project=ConferenceCorpus | |||

|createdAt=2023-11-19 05:21:07+00:00 | |||

|closedAt= | |||

|state=open | |||

}} | |||

==== Implementation ==== | |||

Note how we separate the concerns: one function gets the event series as a dict of lists of dicts, and a separate conversion step turns that result into the different formats the webserver uses to provide the results in a RESTful way.

<source lang='python'> | |||

import io | |||

import re | |||

import pandas as pd | |||

from dataclasses import dataclass, asdict | |||

from fastapi import Response | |||

from fastapi.responses import JSONResponse,FileResponse | |||

from tabulate import tabulate | |||

from typing import List | |||

from spreadsheet.spreadsheet import ExcelDocument | |||

from corpus.lookup import CorpusLookup | |||

from corpus.datasources.openresearch import OREvent, OREventSeries | |||

from corpus.eventseriescompletion import EventSeriesCompletion | |||

class EventSeriesAPI(): | |||

""" | |||

API service for event series data | |||

""" | |||

def __init__(self,lookup:CorpusLookup): | |||

''' | |||

construct me | |||

Args: | |||

lookup | |||

''' | |||

self.lookup=lookup | |||

def getEventSeries(self,name: str,bks:str=None,reduce:bool=False): | |||

''' | |||

Query multiple datasources for the given event series | |||

Args: | |||

name(str): the name of the event series to be queried | |||

''' | |||

multiQuery = "select * from {event}" | |||

idQuery = f"""select source,eventId from event where lookupAcronym LIKE "{name} %" order by year desc""" | |||

dictOfLod = self.lookup.getDictOfLod4MultiQuery(multiQuery, idQuery) | |||

if bks: | |||

allowedBks = bks.split(",") if bks else None | |||

self.filterForBk(dictOfLod.get("tibkat"), allowedBks) | |||

if reduce: | |||

for source in ["tibkat", "dblp"]: | |||

sourceRecords = dictOfLod.get(source) | |||

if sourceRecords: | |||

reducedRecords = EventSeriesCompletion.filterDuplicatesByTitle(sourceRecords) | |||

dictOfLod[source] = reducedRecords | |||

return dictOfLod | |||

def filterForBk(self,lod:List[dict], allowedBks:List[str]): | |||

""" | |||

Filters the given dict to only include the records with their bk in the given list of allowed bks | |||

Args: | |||

lod: list of records to filter | |||

allowedBks: list of allowed bks | |||

""" | |||

if lod is None or allowedBks is None: | |||

return | |||

mainclassBk = set() | |||

subclassBk = set() | |||

allowNullValue = False | |||

for bk in allowedBks: | |||

if bk.isnumeric(): | |||

mainclassBk.add(bk) | |||

elif re.fullmatch(r"\d{1,2}\.\d{2}", bk): | |||

subclassBk.add(bk) | |||

elif bk.lower() == "null" or bk.lower() == "none": | |||

allowNullValue = True | |||

def filterBk(record:dict) -> bool: | |||

keepRecord: bool = False | |||

bks = record.get("bk") | |||

if bks is not None: | |||

bks = set(bks.split("⇹")) | |||

recordMainclasses = {bk.split(".")[0] for bk in bks} | |||

if mainclassBk.intersection(recordMainclasses) or subclassBk.intersection(bks): | |||

keepRecord = True | |||

elif bks is None and allowNullValue: | |||

keepRecord = True | |||

return keepRecord | |||

lod[:] = [record for record in lod if filterBk(record)] | |||

def generateSeriesSpreadsheet(self, name:str, dictOfLods: dict) -> ExcelDocument: | |||

""" | |||

Args: | |||

name(str): name of the series | |||

dictOfLods: records of the series from different sources | |||

Returns: | |||

ExcelDocument | |||

""" | |||

spreadsheet = ExcelDocument(name=name) | |||

# Add completed event sheet and add proceedings sheet | |||

eventHeader = [ | |||

"item", | |||

"label", | |||

"description", | |||

"Ordinal", | |||

"OrdinalStr", | |||

"Acronym", | |||

"Country", | |||

"City", | |||

"Title", | |||

"Series", | |||

"Year", | |||

"Start date", | |||

"End date", | |||

"Homepage", | |||

"dblp", | |||

"dblpId", | |||

"wikicfpId", | |||

"gndId"] | |||

proceedingsHeaders = ["item", "label", "ordinal", "ordinalStr", "description", "Title", "Acronym", | |||

"OpenLibraryId", "oclcId", "isbn13", "ppnId", "gndId", "dblpId", "doi", "Event", | |||

"publishedIn"] | |||

eventRecords = [] | |||

for lod in dictOfLods.values(): | |||

eventRecords.extend(lod) | |||

completedBlankEvent = EventSeriesCompletion.getCompletedBlankSeries(eventRecords) | |||

eventSheetRecords = [] | |||

proceedingsRecords = [] | |||

for year, ordinal in completedBlankEvent: | |||

eventSheetRecords.append({**{k: None for k in eventHeader}, "Ordinal": ordinal, "Year": year}) | |||

proceedingsRecords.append({**{k: None for k in proceedingsHeaders}, "ordinal": ordinal}) | |||

if not eventSheetRecords: | |||

eventSheetRecords = [{k: None for k in eventHeader}] | |||

proceedingsRecords = [{k: None for k in proceedingsHeaders}] | |||

spreadsheet.addTable("Event", eventSheetRecords) | |||

spreadsheet.addTable("Proceedings", proceedingsRecords) | |||

for lods in [dictOfLods, asdict(MetadataMappings())]: | |||

for sheetName, lod in lods.items(): | |||

if isinstance(lod, list): | |||

lod.sort(key=lambda record: 0 if record.get('year',0) is None else record.get('year',0)) | |||

spreadsheet.addTable(sheetName, lod) | |||

return spreadsheet | |||

async def convertToRequestedFormat(self, name: str, dictOfLods: dict, markup_format: str = "json"): | |||

""" | |||

Converts the given dicts of lods to the requested markup format. | |||

Supported formats: json, html, excel, pd_excel, various tabulate formats. | |||

Default format: json | |||

Args: | |||

dictOfLods: data to be converted | |||

Returns: | |||

Response | |||

""" | |||

if markup_format.lower() == "excel": | |||

# Custom Excel spreadsheet generation | |||

spreadsheet = self.generateSeriesSpreadsheet(name, dictOfLods) | |||

# FileResponse expects a path on disk, so the in-memory workbook is returned as a plain Response

excel_bytes = spreadsheet.toBytesIO().getvalue()

headers = {"Content-Disposition": f'attachment; filename="{name}.xlsx"'}

return Response(content=excel_bytes, media_type="application/vnd.ms-excel", headers=headers)

elif markup_format.lower() == "pd_excel": | |||

# Pandas style Excel spreadsheet generation | |||

df = pd.DataFrame.from_dict({k: v for lod in dictOfLods.values() for k, v in lod.items()}) | |||

excel_io = io.BytesIO() | |||

with pd.ExcelWriter(excel_io, engine="xlsxwriter") as writer: | |||

df.to_excel(writer, sheet_name=name) | |||

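# FileResponse expects a path on disk, so the buffer content is returned as a plain Response

headers = {"Content-Disposition": f'attachment; filename="{name}.xlsx"'}

return Response(content=excel_io.getvalue(), media_type="application/vnd.ms-excel", headers=headers)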

elif markup_format.lower() == "json": | |||

# Direct JSON response | |||

return JSONResponse(content=dictOfLods) | |||

else: | |||

# Using tabulate for other formats (including HTML) | |||

tabulated_content = tabulate([lod for lod in dictOfLods.values()], headers="keys", tablefmt=markup_format) | |||

media_type = "text/plain" if markup_format.lower() != "html" else "text/html" | |||

return Response(content=tabulated_content, media_type=media_type) | |||

@dataclass | |||

class MetadataMappings: | |||

""" | |||

Spreadsheet metadata mappings | |||

""" | |||

WikidataMapping: list = None | |||

SmwMapping: list = None | |||

def __init__(self): | |||

self.WikidataMapping = [ | |||

{'Entity': 'Event series', 'Column':None, 'PropertyName': 'instanceof', 'PropertyId': 'P31', 'Value': 'Q47258130'}, | |||

{'Entity': 'Event series', 'Column': 'Acronym', 'PropertyName': 'short name', 'PropertyId': 'P1813', 'Type': 'text'}, | |||

{'Entity': 'Event series', 'Column': 'Title', 'PropertyName': 'title', 'PropertyId': 'P1476', 'Type': 'text'}, | |||

{'Entity': 'Event series', 'Column': 'Homepage', 'PropertyName': 'official website', 'PropertyId': 'P856', 'Type': 'url'}, | |||

{'Entity': 'Event', 'Column':None, 'PropertyName': 'instanceof', 'PropertyId': 'P31', 'Value': 'Q2020153'}, | |||

{'Entity': 'Event', 'Column': 'Series', 'PropertyName': 'part of the series', 'PropertyId': 'P179'}, | |||

{'Entity': 'Event', 'Column': 'Ordinal', 'PropertyName': 'series ordinal', 'PropertyId': 'P1545', 'Type': 'string', 'Qualifier': 'part of the series'}, | |||

{'Entity': 'Event', 'Column': 'Acronym', 'PropertyName': 'short name', 'PropertyId': 'P1813', 'Type': 'text'}, | |||

{'Entity': 'Event', 'Column': 'Title', 'PropertyName': 'title', 'PropertyId': 'P1476', 'Type': 'text'}, | |||

{'Entity': 'Event', 'Column': 'Country', 'PropertyName': 'country', 'PropertyId': 'P17', 'Lookup': 'Q3624078'}, | |||

{'Entity': 'Event', 'Column': 'City', 'PropertyName': 'location', 'PropertyId': 'P276', 'Lookup': 'Q515'}, | |||

{'Entity': 'Event', 'Column': 'Start date', 'PropertyName': 'start time', 'PropertyId': 'P580', 'Type': 'date'}, | |||

{'Entity': 'Event', 'Column': 'End date', 'PropertyName': 'end time', 'PropertyId': 'P582', 'Type': 'date'}, | |||

{'Entity': 'Event', 'Column': 'gndId', 'PropertyName': 'GND ID', 'PropertyId': 'P227', 'Type': 'extid'}, | |||

{'Entity': 'Event', 'Column': 'dblpUrl', 'PropertyName': 'describedAt', 'PropertyId': 'P973', 'Type': 'url'}, | |||

{'Entity': 'Event', 'Column': 'Homepage', 'PropertyName': 'official website', 'PropertyId': 'P856', 'Type': 'url'}, | |||

{'Entity': 'Event', 'Column': 'wikicfpId', 'PropertyName': 'WikiCFP event ID', 'PropertyId': 'P5124', 'Type': 'extid'}, | |||

{'Entity': 'Event', 'Column': 'dblpId', 'PropertyName': 'DBLP event ID', 'PropertyId': 'P10692', 'Type': 'extid'}, | |||

{'Entity': 'Proceedings', 'Column':None, 'PropertyName': 'instanceof', 'PropertyId': 'P31', 'Value': 'Q1143604'}, | |||

{'Entity': 'Proceedings', 'Column': 'Acronym', 'PropertyName': 'short name', 'PropertyId': 'P1813', 'Type': 'text'}, | |||

{'Entity': 'Proceedings', 'Column': 'Title', 'PropertyName': 'title', 'PropertyId': 'P1476', 'Type': 'text'}, | |||

{'Entity': 'Proceedings', 'Column': 'OpenLibraryId', 'PropertyName': 'Open Library ID', 'PropertyId': 'P648', 'Type': 'extid'}, | |||

{'Entity': 'Proceedings', 'Column': 'ppnId', 'PropertyName': 'K10plus PPN ID', 'PropertyId': 'P6721', 'Type': 'extid'}, | |||

{'Entity': 'Proceedings', 'Column': 'Event', 'PropertyName': 'is proceedings from', 'PropertyId': 'P4745', 'Lookup': 'Q2020153'}, | |||

{'Entity': 'Proceedings', 'Column': 'publishedIn', 'PropertyName': 'published in', 'PropertyId': 'P1433', 'Lookup': 'Q39725049'}, | |||

{'Entity': 'Proceedings', 'Column': 'oclcId', 'PropertyName': 'OCLC work ID','PropertyId': 'P5331', 'Type': 'extid'}, | |||

{'Entity': 'Proceedings', 'Column': 'isbn13', 'PropertyName': 'ISBN-13', 'PropertyId': 'P212', 'Type': 'extid'}, | |||

{'Entity': 'Proceedings', 'Column': 'doi', 'PropertyName': 'DOI', 'PropertyId': 'P356', 'Type': 'extid'}, | |||

{'Entity': 'Proceedings', 'Column': 'dblpId', 'PropertyName': 'DBLP event ID', 'PropertyId': 'P10692', 'Type': 'extid'}, | |||

] | |||

self.SmwMapping = [ | |||

*[{"Entity":"Event", | |||

"Column":r.get("templateParam"), | |||

"PropertyName":r.get("name"), | |||

"PropertyId":r.get("prop"), | |||

"TemplateParam": r.get("templateParam") | |||

} for r in OREvent.propertyLookupList | |||

], | |||

*[{"Entity": "Event series", | |||

"Column": r.get("templateParam"), | |||

"PropertyName": r.get("name"), | |||

"PropertyId": r.get("prop"), | |||

"TemplateParam": r.get("templateParam") | |||

} for r in OREventSeries.propertyLookupList | |||

] | |||

] | |||

</source> | |||
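The BK filtering rule used by filterForBk can be tried in isolation. The following is a minimal sketch and not the project's code: the helper name `bk_matches` and the constant `BK_SEPARATOR` are hypothetical, and the sketch assumes that the BK codes of a record are separated by "⇹" as in the implementation above.

```python
import re
from typing import List, Optional

BK_SEPARATOR = "⇹"  # assumed separator of the bk field, taken from the code above


def bk_matches(record_bk: Optional[str], allowed_bks: List[str]) -> bool:
    """hypothetical helper: decide whether a single bk value passes the filter"""
    # purely numeric filters such as "54" select a whole main class
    main_classes = {bk for bk in allowed_bks if bk.isnumeric()}
    # filters such as "85.20" select one subclass
    sub_classes = {bk for bk in allowed_bks if re.fullmatch(r"\d{1,2}\.\d{2}", bk)}
    # "null" or "none" lets records without a bk value pass
    allow_null = any(bk.lower() in ("null", "none") for bk in allowed_bks)
    if record_bk is None:
        return allow_null
    record_bks = set(record_bk.split(BK_SEPARATOR))
    record_main_classes = {bk.split(".")[0] for bk in record_bks}
    return bool(main_classes & record_main_classes or sub_classes & record_bks)


records = ["54.84⇹85.20", "54.84⇹81.68⇹85.20⇹88.03⇹54.65", None, "85.20"]
kept = [bk for bk in records if bk_matches(bk, ["54", "null"])]
print(len(kept))
```

With the filter `["54", "null"]` the two records in main class 54 and the record without a bk value are kept, while the record that only carries "85.20" is dropped.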

==== Test for event_series_api ==== | |||

<source lang='python'>import json | |||

from dataclasses import asdict | |||

from spreadsheet.googlesheet import GoogleSheet | |||

from corpus.web.eventseries import MetadataMappings, EventSeriesAPI | |||

from tests.datasourcetoolbox import DataSourceTest | |||

from corpus.lookup import CorpusLookup | |||

class TestEventSeriesAPI(DataSourceTest): | |||

""" | |||

tests EventSeriesAPI

""" | |||

@classmethod | |||

def setUpClass(cls)->None: | |||

super(TestEventSeriesAPI, cls).setUpClass() | |||

cls.lookup=CorpusLookup() | |||

def setUp(self, debug=False, profile=True, timeLimitPerTest=10.0): | |||

DataSourceTest.setUp(self, debug=debug, profile=profile, timeLimitPerTest=timeLimitPerTest) | |||

self.lookup=TestEventSeriesAPI.lookup | |||

def testLookup(self): | |||

""" | |||

check the lookup | |||

""" | |||

self.assertTrue(self.lookup is not None) | |||

debug=self.debug | |||

datasource_count=len(self.lookup.eventCorpus.eventDataSources) | |||

if debug: | |||

print(f"found {datasource_count} datasources") | |||

self.assertTrue(datasource_count>3) | |||

def test_extractWikidataMapping(self): | |||

""" | |||

extracts wikidata metadata mapping from given google docs url | |||

""" | |||

url = "https://docs.google.com/spreadsheets/d/1-6llZSTVxNrYH4HJ0DotMjVu9cTHv2WnT_thfQ-3q14" | |||

gs = GoogleSheet(url) | |||

gs.open(["Wikidata"]) | |||

lod=gs.asListOfDicts("Wikidata") | |||

debug=self.debug | |||

#debug=True | |||

if debug: | |||

print(f"found {len(lod)} events") | |||

print(json.dumps(lod,indent=2)) | |||

self.assertEqual(23,len(lod)) | |||

pass | |||

#sheet = [{k:v for k,v in record.items() if (not k.startswith("Unnamed")) and v is not ''} for record in ] | |||

#print(sheet) | |||

def test_MetadataMapping(self): | |||

""" | |||

test the metadata mapping | |||

""" | |||

mapping = MetadataMappings() | |||

debug=self.debug | |||

#debug=True | |||

mapping_dict=asdict(mapping) | |||

if debug: | |||

print(json.dumps(mapping_dict,indent=2)) | |||

self.assertTrue("WikidataMapping" in mapping_dict) | |||

def testGetEventSeries(self): | |||

''' | |||

tests the multiquerying of event series over api | |||

some 17 secs for test | |||

''' | |||

es_api=EventSeriesAPI(self.lookup) | |||

dict_of_lods=es_api.getEventSeries(name="WEBIST") | |||

debug=self.debug | |||

#debug=True | |||

if debug: | |||

print(json.dumps(dict_of_lods,indent=2,default=str)) | |||

self.assertTrue("confref" in dict_of_lods) | |||

self.assertTrue(len(dict_of_lods["confref"]) > 15) | |||

def test_getEventSeriesBkFilter(self): | |||

""" | |||

tests getEventSeries bk filter | |||

see https://github.com/WolfgangFahl/ConferenceCorpus/issues/55 | |||

""" | |||

bks_list = ["85.20", "54.65,54.84", "54.84,85"] | |||

es_api=EventSeriesAPI(self.lookup) | |||

for bks in bks_list: | |||

res=es_api.getEventSeries(name="WEBIST", bks=bks) | |||

self.assertIn("tibkat", res) | |||

bksPerRecord = [record.get("bk") for record in res.get("tibkat")] | |||

expectedBks = set(bks.split(",")) | |||

for rawBks in bksPerRecord: | |||

self.assertIsNotNone(rawBks) | |||

bks = set(rawBks.split("⇹")) | |||

bks = bks.union({bk.split(".")[0] for bk in bks}) | |||

self.assertTrue(bks.intersection(expectedBks)) | |||

def test_filterForBk(self): | |||

""" | |||

tests filterForBk | |||

""" | |||

testMatrix = [ | |||

(['54.84⇹85.20', '54.84⇹85.20', '54.84⇹81.68⇹85.20⇹88.03⇹54.65', '85.20'], ["54"], 3), | |||

(['54.84⇹85.20', '54.84⇹85.20', '54.84⇹81.68⇹85.20⇹88.03⇹54.65', '85.20'], ["54","85"], 4), | |||

(['54.84⇹85.20', '54.84⇹85.20', '54.84⇹81.68⇹85.20⇹88.03⇹54.65', '85.20'], ["85.20"], 4), | |||

(['54.84⇹85.20', '54.84⇹85.20', '54.84⇹81.68⇹85.20⇹88.03⇹54.65', '85.20'], ["02"], 0), | |||

(['54.84⇹85.20', '54.84⇹85.20', '54.84⇹81.68⇹85.20⇹88.03⇹54.65', '85.20'], ["81.68","88.03"], 1), | |||

(['54.84⇹85.20', '54.84⇹85.20', None, '85.20'], ["54"], 2), | |||

(['54.84⇹85.20', '54.84⇹85.20', None, '85.20'], ["54","null"], 3), | |||

(['54.84⇹85.20', '54.84⇹85.20', None, '85.20'], ["null"], 1), | |||

(['54.84⇹85.20', '54.84⇹85.20', None, '85.20'], ["none"], 1), | |||

] | |||

es_api=EventSeriesAPI(self.lookup) | |||

for recordData, bkFilter, expectedNumberOfRecords in testMatrix: | |||

lod = [{"bk":bk} for bk in recordData] | |||

es_api.filterForBk(lod, bkFilter) | |||

self.assertEqual(len(lod), expectedNumberOfRecords, f"Tried to filter for {bkFilter} and expected {expectedNumberOfRecords} but filter left {len(lod)} in the list") | |||

def testTibkatReducingRecords(self): | |||

""" | |||

tests deduplication of the tibkat records if reduce parameter is set | |||

""" | |||

name="AAAI" | |||

expected={ | |||

False: 400, | |||

True: 50 | |||

} | |||

es_api=EventSeriesAPI(lookup=self.lookup) | |||

for reduce in (True,False): | |||

with self.subTest(msg=f"Testing with reduce={reduce}", testParam=reduce): | |||

res=es_api.getEventSeries(name=name, reduce=reduce) | |||

self.assertIn("tibkat", res) | |||

tibkat_count=len(res.get("tibkat")) | |||

should=expected[reduce]<=tibkat_count | |||

if reduce: | |||

should=not should | |||

self.assertTrue(should) | |||

</source> | |||

===== Test Result ===== | |||

<source lang='bash' highlight='1'> | |||

ConferenceCorpus % scripts/test --venv -tn test_eventseries_api | |||

No new changes in tests or corpus directories. | |||

Starting test testGetEventSeries ... with debug=False ... | |||

test testGetEventSeries ... with debug=False took 8.5 s | |||

.Starting test testLookup ... with debug=False ... | |||

test testLookup ... with debug=False took 0.0 s | |||

.Starting test testTibkatReducingRecords ... with debug=False ... | |||

test testTibkatReducingRecords ... with debug=False took 5.2 s | |||

.Starting test test_MetadataMapping ... with debug=False ... | |||

test test_MetadataMapping ... with debug=False took 0.0 s | |||

.Starting test test_extractWikidataMapping ... with debug=False ... | |||

test test_extractWikidataMapping ... with debug=False took 0.5 s | |||

.Starting test test_filterForBk ... with debug=False ... | |||

test test_filterForBk ... with debug=False took 0.0 s | |||

.Starting test test_getEventSeriesBkFilter ... with debug=False ... | |||

test test_getEventSeriesBkFilter ... with debug=False took 2.9 s | |||

. | |||

---------------------------------------------------------------------- | |||

Ran 7 tests in 17.202s | |||

OK | |||

</source> | |||

= Test first =

Revision as of 07:19, 19 November 2023

This is a tutorial for the Nicegui widgets

Prerequisites

Before starting the tutorial, it's important to ensure the following prerequisites are met:

- Python Programming Knowledge: Familiarity with basic Python programming concepts. A good starting point is the Python Wikipedia page.

- Web Development Basics: Understanding of web development frameworks and concepts. More information can be found on the Web Development Wikipedia page.

- Terminal Access: Ability to use a command-line interface or terminal. See Command-line Interface on Wikipedia.

- Python Installation: Python 3.9 or higher should be installed on your system. See Python Downloads.

- GitHub and Version Control: Basic knowledge of using GitHub and version control systems. Learn more at GitHub Quickstart and the Version Control Wikipedia page.

- Pip for Python: Understanding of Python's package management system, pip. Refer to Pip Getting Started Guide and the Pip Wikipedia page for more information.

- Integrated Development Environment (IDE): While not mandatory, using an IDE can greatly enhance your coding experience. Recommended IDEs include:

- PyCharm: A Python-specific IDE known for its powerful features and ease of use. More details at PyCharm Wikipedia page.

- Visual Studio Code: A versatile and lightweight code editor with extensive plugin support. See the Visual Studio Code Wikipedia page.

- LiClipse: An easy-to-use IDE with support for multiple languages and frameworks. Information available on the LiClipse Wikipedia page.

These prerequisites are essential for effectively following the tutorial and understanding the implementation of the ConferenceCorpus project.

Windows

If you use Windows you might be out of luck for quite a few of the scripts and tools mentioned here. For best results you might want to install an environment that is capable of handling these scripts and tools, such as Docker or the Windows Subsystem for Linux (WSL). See e.g. Ubuntu in WSL for more details.

MacOS

Life's good on MacOS. It's even better with Homebrew and/or MacPorts.

Linux

Example Usecase

This tutorial uses the ConferenceCorpus as its use case. As part of my research for the ConfIDent Project I am gathering metadata for scientific events from different datasources. A proper frontend has been lacking for the past few years, although there have been several attempts to create a webserver and RESTful services.

As of 2023-11 the project is now migrated to nicegui using the nicegui widgets (demo)

The github ticket for the migration is Issue 58 - refactor to use nicegui

Setting up the project

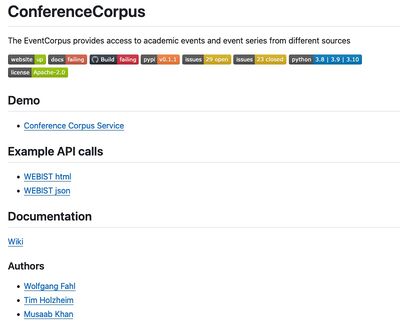

The project is hosted on GitHub, see ConferenceCorpus.

README

A GitHub README is a document that introduces and explains a project hosted on GitHub, providing essential information such as its purpose, how to install and use it, and its features. It is important because it serves as the first point of contact for anyone encountering the project, helping them understand what the project is about and how to engage with it.

The README consists of

- header

- badges

- link to demo

- example API calls

- links to documentation

- authors list

README screenshot as of start of migration

License

The LICENSE file in a software project specifies the legal terms under which the software can be used, modified, and shared, defining the rights and restrictions for users and developers. Using the Apache License is a good choice for many projects because it is a permissive open-source license that allows for broad freedom in use and distribution, while also providing legal protection against patent claims and requiring preservation of copyright notices.

pyproject.toml

pyproject.toml is a configuration file used in Python projects to define project metadata, dependencies,

and build system requirements, providing a consistent and standardized way to configure Python projects.

For more information, see pyproject.toml documentation.

Hatchling is a popular choice for a build system because it offers a modern, fast, and straightforward approach

to building and managing Python projects, aligning well with the standardized structure provided by

pyproject.toml. Learn more about Hatchling at Hatchling on GitHub.

This pyproject.toml file configures the Python project 'ConferenceCorpus', an API for accessing academic events and event series from different sources.

Build System

The [build-system] section defines the build requirements and backend. It specifies the use of 'hatchling' as the build backend:

[build-system]

requires = ["hatchling"]

build-backend = "hatchling.build"

Hatchling is a modern build backend for Python projects, aligning with PEP 517.

Project Metadata

Under the [project] section, several key details about the project are listed:

[project]

name = "ConferenceCorpus"

description = "python api providing access to academic events and event series from different sources"

home-page = "http://wiki.bitplan.com/index.php/ConferenceCorpus"

readme = "README.md"

license = {text = "Apache-2.0"}

authors = [{name = "Wolfgang Fahl", email = "wf@WolfgangFahl.com"}]

maintainers = [{name = "Wolfgang Fahl", email = "wf@WolfgangFahl.com"}]

requires-python = ">=3.9"

classifiers = ['Programming Language :: Python', ...]

dynamic = ["version"]

This section sets the project's name, description, homepage, README, license, authors, maintainers, required Python version, classifiers, and dynamic versioning.

Dependencies

The dependencies section lists the required external libraries for the project to function:

dependencies = [

"pylodstorage>=0.4.9",

...

]

These dependencies ensure that the necessary packages are installed for the project.

Project Scripts

The [project.scripts] section defines executable commands for various functionalities, with a focus on the `ccServer` script for starting the web server:

[project.scripts]

ccServer = "corpus.web.cc_cmd:main"

...

Understanding the ccServer Script

- Command: `ccServer` is the command that users will run in the terminal.

- Function: The value `"corpus.web.cc_cmd:main"` specifies the Python function that gets executed when `ccServer` is invoked.

  - Package and Module: The first part, `corpus.web.cc_cmd`, is the Python package and module where the function resides.

  - Function Name: The second part, `main`, is the name of the function within that module.

Usage

- To start the webserver, a user would execute the following command in the terminal:

$ ccServer

- This command calls the `main` function from the `cc_cmd.py` file located in the `corpus.web` package, effectively initiating the web server for the ConferenceCorpus project.
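How a `module:function` entry point string is resolved can be sketched in a few lines. This only illustrates the principle and is not the launcher code that pip generates. The helper name `resolve_entry_point` is hypothetical, and the standard library's `json:dumps` stands in for `corpus.web.cc_cmd:main` so that the example runs without ConferenceCorpus being installed.

```python
import importlib
from typing import Callable


def resolve_entry_point(spec: str) -> Callable:
    """hypothetical helper: turn 'package.module:function' into a callable"""
    # split "package.module:function" at the colon
    module_name, _, function_name = spec.partition(":")
    # import the module part, then look up the function by name
    module = importlib.import_module(module_name)
    return getattr(module, function_name)


# stand-in for "corpus.web.cc_cmd:main"
func = resolve_entry_point("json:dumps")
print(func({"entry": "point"}))
```

Calling the resolved function is then all that remains for a launcher to do, which is why the `main` function in `cc_cmd.py` returns an exit code.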

Project URLs

The [project.urls] section provides links to the project's home, source, and documentation:

[project.urls]

Home = "http://wiki.bitplan.com/index.php/ConferenceCorpus"

Source = "https://github.com/WolfgangFahl/ConferenceCorpus"

Documentation = "https://github.com/WolfgangFahl/ConferenceCorpus/issues"

These URLs direct users to relevant resources for the project.

Command line

Thanks to the WebserverCmd base class of nicegui widgets, a command line startup python script only needs a few lines:

'''
Created on 2023-09-10

@author: wf
'''
import sys
from ngwidgets.cmd import WebserverCmd
from corpus.web.cc_webserver import ConferenceCorpusWebserver

class ConferenceCorpusCmd(WebserverCmd):
    """
    command line handling for Conference Corpus Webserver
    """

def main(argv:list=None):
    """
    main call
    """
    cmd=ConferenceCorpusCmd(config=ConferenceCorpusWebserver.get_config(),webserver_cls=ConferenceCorpusWebserver)
    exit_code=cmd.cmd_main(argv)
    return exit_code

DEBUG = 0
if __name__ == "__main__":
    if DEBUG:
        sys.argv.append("-d")
    sys.exit(main())

Usage

ccServer -h
usage: ccServer [-h] [-a] [-c] [-d] [--debugServer DEBUGSERVER]
                [--debugPort DEBUGPORT] [--debugRemotePath DEBUGREMOTEPATH]
                [--debugLocalPath DEBUGLOCALPATH] [-l] [-i INPUT] [-rol]
                [--host HOST] [--port PORT] [-s] [-V]

Conference Corpus Volume browser

options:
  -h, --help            show this help message and exit
  -a, --about           show about info [default: False]
  -c, --client          start client [default: False]
  -d, --debug           show debug info [default: False]
  --debugServer DEBUGSERVER
                        remote debug Server
  --debugPort DEBUGPORT
                        remote debug Port
  --debugRemotePath DEBUGREMOTEPATH
                        remote debug Server path mapping - remotePath - path
                        on debug server
  --debugLocalPath DEBUGLOCALPATH
                        remote debug Server path mapping - localPath - path on
                        machine where python runs
  -l, --local           run with local file system access [default: False]
  -i INPUT, --input INPUT
                        input file
  -rol, --render_on_load
                        render on load [default: False]
  --host HOST           the host to serve / listen from [default: localhost]
  --port PORT           the port to serve from [default: 5005]
  -s, --serve           start webserver [default: False]
  -V, --version         show program's version number and exit

Installation

Install using pip

We use pip to install

pip install .

Install using scripts/install

There is also a bash script in scripts/install (which we'll later use in our GitHub CI).

Webserver

Minimum Webserver code

Thanks to the InputWebserver base class of nicegui widgets, the cc_webserver module only needs a few lines:

"""
Created on 2023-11-18

@author: wf
"""
from ngwidgets.input_webserver import InputWebserver
from ngwidgets.webserver import WebserverConfig
from corpus.version import Version

class ConferenceCorpusWebserver(InputWebserver):
    """
    Webserver for the Conference Corpus
    """

    @classmethod
    def get_config(cls) -> WebserverConfig:
        """
        get the configuration for this Webserver
        """
        copy_right = "(c)2020-2023 Wolfgang Fahl"
        config = WebserverConfig(
            copy_right=copy_right, version=Version(), default_port=5005
        )
        return config

    def __init__(self):
        """Constructor"""
        InputWebserver.__init__(self, config=ConferenceCorpusWebserver.get_config())

Starting the webserver from the commandline

ccServer -s -c

will start your webserver and open a browser to access it. The default port is 5005 and therefore the link http://localhost:5005 should allow you to test the results.

ccServer -s --host 0.0.0.0 --port 80

will make the server the default webserver on your intranet, and http://<your-hostname> will allow you to access your server.

The default menu looks like this:

And the default footer like this:

Homepage content

For this tutorial consider DataSource to be just an example. We only show the title here anyway, so you could use something like data_sources=["A","B","C"] to try out the principle in your own application.

The core idea here is that setup_content_div will accept an asynchronous or normal function as a parameter and then call that to get the ui elements for your pages. This way the layout of all pages can be the same - just the content changes.

    def setup_home(self):
        """
        first load all data sources then
        show a table of these
        """
        msg="loading datasources ..."
        self.loading_msg=ui.html(msg)
        profiler=Profiler(msg,profile=False)
        DataSource.getAll()
        elapsed=profiler.time()
        data_sources=DataSource.sources.values()
        msg=f"{len(data_sources)} datasources loaded in {elapsed*1000:5.0f} msecs"
        self.loading_msg.content=msg
        for index,source in enumerate(data_sources,start=1):
            ui.label(f"{index}:{source.title}")
        pass

    async def home(self, _client: Client):
        """
        provide the main content page
        """
        await(self.setup_content_div(self.setup_home))
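The idea that one layout helper accepts either a normal or an asynchronous function can be sketched without nicegui. The names `run_content`, `setup_sync` and `setup_async` below are hypothetical, and the placeholder header and footer strings merely stand in for the shared page layout. How `setup_content_div` is actually implemented in nicegui widgets is not shown in this tutorial.

```python
import asyncio
import inspect
from typing import Awaitable, Callable, Union


async def run_content(setup: Callable[[], Union[str, Awaitable[str]]]) -> str:
    """hypothetical helper: call setup and await its result only if needed"""
    result = setup()
    # an async function returns a coroutine that still has to be awaited
    if inspect.isawaitable(result):
        result = await result
    # the surrounding layout stays the same, only the content changes
    return f"<header/>{result}<footer/>"


def setup_sync() -> str:
    return "sync content"


async def setup_async() -> str:
    await asyncio.sleep(0)
    return "async content"


print(asyncio.run(run_content(setup_sync)))
print(asyncio.run(run_content(setup_async)))
```

Checking the returned value with `inspect.isawaitable` keeps the caller side identical for both kinds of setup functions.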

Adding a RESTFul service

eventseries_api

Issue 59 - refactor eventseries RESTFul service to nicegui/FastAPI

Implementation

Note how we separate the concerns: one function gets the event series as a dict of lists of dicts, and a separate conversion step turns that result into the different formats the webserver uses to provide the results in a RESTful way.

import io

import re

import pandas as pd

from dataclasses import dataclass, asdict

from fastapi import Response

from fastapi.responses import JSONResponse,FileResponse

from tabulate import tabulate

from typing import List

from spreadsheet.spreadsheet import ExcelDocument

from corpus.lookup import CorpusLookup

from corpus.datasources.openresearch import OREvent, OREventSeries

from corpus.eventseriescompletion import EventSeriesCompletion

class EventSeriesAPI():

"""

API service for event series data

"""

def __init__(self,lookup:CorpusLookup):

'''

construct me

Args:

lookup

'''

self.lookup=lookup

def getEventSeries(self,name: str,bks:str=None,reduce:bool=False):

'''

Query multiple datasources for the given event series

Args:

name(str): the name of the event series to be queried

'''

multiQuery = "select * from {event}"

idQuery = f"""select source,eventId from event where lookupAcronym LIKE "{name} %" order by year desc"""

dictOfLod = self.lookup.getDictOfLod4MultiQuery(multiQuery, idQuery)

if bks:

allowedBks = bks.split(",") if bks else None

self.filterForBk(dictOfLod.get("tibkat"), allowedBks)

if reduce:

for source in ["tibkat", "dblp"]:

sourceRecords = dictOfLod.get(source)

if sourceRecords:

reducedRecords = EventSeriesCompletion.filterDuplicatesByTitle(sourceRecords)

dictOfLod[source] = reducedRecords

return dictOfLod

    def filterForBk(self,lod:List[dict], allowedBks:List[str]):
        """
        Filters the given list of records in place to only include the records with their bk in the given list of allowed bks

        Args:
            lod: list of records to filter
            allowedBks: list of allowed bks: main classes such as "54", subclasses such as "54.84", or "null"/"none" to keep records without a bk
        """
        if lod is None or allowedBks is None:
            return
        mainclassBk = set()
        subclassBk = set()
        allowNullValue = False
        for bk in allowedBks:
            if bk.isnumeric():
                mainclassBk.add(bk)
            elif re.fullmatch(r"\d{1,2}\.\d{2}", bk):
                subclassBk.add(bk)
            elif bk.lower() == "null" or bk.lower() == "none":
                allowNullValue = True

        def filterBk(record:dict) -> bool:
            keepRecord: bool = False
            bks = record.get("bk")
            if bks is not None:
                bks = set(bks.split("⇹"))
                recordMainclasses = {bk.split(".")[0] for bk in bks}
                if mainclassBk.intersection(recordMainclasses) or subclassBk.intersection(bks):
                    keepRecord = True
            elif bks is None and allowNullValue:
                keepRecord = True
            return keepRecord

        lod[:] = [record for record in lod if filterBk(record)]

def generateSeriesSpreadsheet(self, name:str, dictOfLods: dict) -> ExcelDocument:

"""

Args:

name(str): name of the series

dictOfLods: records of the series from different sources

Returns:

ExcelDocument

"""

spreadsheet = ExcelDocument(name=name)

# Add completed event sheet and add proceedings sheet

eventHeader = [

"item",

"label",

"description",

"Ordinal",

"OrdinalStr",

"Acronym",

"Country",

"City",

"Title",

"Series",

"Year",

"Start date",

"End date",

"Homepage",

"dblp",

"dblpId",

"wikicfpId",

"gndId"]

proceedingsHeaders = ["item", "label", "ordinal", "ordinalStr", "description", "Title", "Acronym",

"OpenLibraryId", "oclcId", "isbn13", "ppnId", "gndId", "dblpId", "doi", "Event",

"publishedIn"]

eventRecords = []

for lod in dictOfLods.values():

eventRecords.extend(lod)

completedBlankEvent = EventSeriesCompletion.getCompletedBlankSeries(eventRecords)

eventSheetRecords = []

proceedingsRecords = []

for year, ordinal in completedBlankEvent:

eventSheetRecords.append({**{k: None for k in eventHeader}, "Ordinal": ordinal, "Year": year})

proceedingsRecords.append({**{k: None for k in proceedingsHeaders}, "ordinal": ordinal})

if not eventSheetRecords:

eventSheetRecords = [{k: None for k in eventHeader}]

proceedingsRecords = [{k: None for k in proceedingsHeaders}]

spreadsheet.addTable("Event", eventSheetRecords)

spreadsheet.addTable("Proceedings", proceedingsRecords)

for lods in [dictOfLods, asdict(MetadataMappings())]:

for sheetName, lod in lods.items():

if isinstance(lod, list):

lod.sort(key=lambda record: 0 if record.get('year',0) is None else record.get('year',0))

spreadsheet.addTable(sheetName, lod)

return spreadsheet

    async def convertToRequestedFormat(self, name: str, dictOfLods: dict, markup_format: str = "json"):
        """
        Converts the given dicts of lods to the requested markup format.
        Supported formats: json, html, excel, pd_excel, various tabulate formats.
        Default format: json

        Args:
            name(str): name of the event series, used for the download filename
            dictOfLods: data to be converted
            markup_format(str): the requested format

        Returns:
            Response
        """
        xlsx_media_type = "application/vnd.openxmlformats-officedocument.spreadsheetml.sheet"
        download_headers = {"Content-Disposition": f'attachment; filename="{name}.xlsx"'}
        # flatten the records of all sources for the single-table formats
        records = [record for lod in dictOfLods.values() for record in lod]
        if markup_format.lower() == "excel":
            # Custom Excel spreadsheet generation
            spreadsheet = self.generateSeriesSpreadsheet(name, dictOfLods)
            # FileResponse expects a file path, so the in-memory bytes are returned directly
            return Response(content=spreadsheet.toBytesIO().getvalue(), media_type=xlsx_media_type, headers=download_headers)
        elif markup_format.lower() == "pd_excel":
            # Pandas style Excel spreadsheet generation
            df = pd.DataFrame(records)
            excel_io = io.BytesIO()
            with pd.ExcelWriter(excel_io, engine="xlsxwriter") as writer:
                df.to_excel(writer, sheet_name=name)
            return Response(content=excel_io.getvalue(), media_type=xlsx_media_type, headers=download_headers)
        elif markup_format.lower() == "json":
            # Direct JSON response
            return JSONResponse(content=dictOfLods)
        else:
            # Using tabulate for other formats (including HTML)
            tabulated_content = tabulate(records, headers="keys", tablefmt=markup_format)
            media_type = "text/plain" if markup_format.lower() != "html" else "text/html"
            return Response(content=tabulated_content, media_type=media_type)

@dataclass

class MetadataMappings:

"""

Spreadsheet metadata mappings

"""

WikidataMapping: list = None

SmwMapping: list = None

def __init__(self):

self.WikidataMapping = [

{'Entity': 'Event series', 'Column':None, 'PropertyName': 'instanceof', 'PropertyId': 'P31', 'Value': 'Q47258130'},

{'Entity': 'Event series', 'Column': 'Acronym', 'PropertyName': 'short name', 'PropertyId': 'P1813', 'Type': 'text'},

{'Entity': 'Event series', 'Column': 'Title', 'PropertyName': 'title', 'PropertyId': 'P1476', 'Type': 'text'},

{'Entity': 'Event series', 'Column': 'Homepage', 'PropertyName': 'official website', 'PropertyId': 'P856', 'Type': 'url'},

{'Entity': 'Event', 'Column':None, 'PropertyName': 'instanceof', 'PropertyId': 'P31', 'Value': 'Q2020153'},

{'Entity': 'Event', 'Column': 'Series', 'PropertyName': 'part of the series', 'PropertyId': 'P179'},

{'Entity': 'Event', 'Column': 'Ordinal', 'PropertyName': 'series ordinal', 'PropertyId': 'P1545', 'Type': 'string', 'Qualifier': 'part of the series'},

{'Entity': 'Event', 'Column': 'Acronym', 'PropertyName': 'short name', 'PropertyId': 'P1813', 'Type': 'text'},

{'Entity': 'Event', 'Column': 'Title', 'PropertyName': 'title', 'PropertyId': 'P1476', 'Type': 'text'},

{'Entity': 'Event', 'Column': 'Country', 'PropertyName': 'country', 'PropertyId': 'P17', 'Lookup': 'Q3624078'},

{'Entity': 'Event', 'Column': 'City', 'PropertyName': 'location', 'PropertyId': 'P276', 'Lookup': 'Q515'},

{'Entity': 'Event', 'Column': 'Start date', 'PropertyName': 'start time', 'PropertyId': 'P580', 'Type': 'date'},

{'Entity': 'Event', 'Column': 'End date', 'PropertyName': 'end time', 'PropertyId': 'P582', 'Type': 'date'},

{'Entity': 'Event', 'Column': 'gndId', 'PropertyName': 'GND ID', 'PropertyId': 'P227', 'Type': 'extid'},

{'Entity': 'Event', 'Column': 'dblpUrl', 'PropertyName': 'describedAt', 'PropertyId': 'P973', 'Type': 'url'},

{'Entity': 'Event', 'Column': 'Homepage', 'PropertyName': 'official website', 'PropertyId': 'P856', 'Type': 'url'},

{'Entity': 'Event', 'Column': 'wikicfpId', 'PropertyName': 'WikiCFP event ID', 'PropertyId': 'P5124', 'Type': 'extid'},

{'Entity': 'Event', 'Column': 'dblpId', 'PropertyName': 'DBLP event ID', 'PropertyId': 'P10692', 'Type': 'extid'},

{'Entity': 'Proceedings', 'Column':None, 'PropertyName': 'instanceof', 'PropertyId': 'P31', 'Value': 'Q1143604'},

{'Entity': 'Proceedings', 'Column': 'Acronym', 'PropertyName': 'short name', 'PropertyId': 'P1813', 'Type': 'text'},

{'Entity': 'Proceedings', 'Column': 'Title', 'PropertyName': 'title', 'PropertyId': 'P1476', 'Type': 'text'},

{'Entity': 'Proceedings', 'Column': 'OpenLibraryId', 'PropertyName': 'Open Library ID', 'PropertyId': 'P648', 'Type': 'extid'},

{'Entity': 'Proceedings', 'Column': 'ppnId', 'PropertyName': 'K10plus PPN ID', 'PropertyId': 'P6721', 'Type': 'extid'},

{'Entity': 'Proceedings', 'Column': 'Event', 'PropertyName': 'is proceedings from', 'PropertyId': 'P4745', 'Lookup': 'Q2020153'},

{'Entity': 'Proceedings', 'Column': 'publishedIn', 'PropertyName': 'published in', 'PropertyId': 'P1433', 'Lookup': 'Q39725049'},

{'Entity': 'Proceedings', 'Column': 'oclcId', 'PropertyName': 'OCLC work ID','PropertyId': 'P5331', 'Type': 'extid'},

{'Entity': 'Proceedings', 'Column': 'isbn13', 'PropertyName': 'ISBN-13', 'PropertyId': 'P212', 'Type': 'extid'},

{'Entity': 'Proceedings', 'Column': 'doi', 'PropertyName': 'DOI', 'PropertyId': 'P356', 'Type': 'extid'},

{'Entity': 'Proceedings', 'Column': 'dblpId', 'PropertyName': 'DBLP event ID', 'PropertyId': 'P10692', 'Type': 'extid'},

]

self.SmwMapping = [

*[{"Entity":"Event",

"Column":r.get("templateParam"),

"PropertyName":r.get("name"),

"PropertyId":r.get("prop"),

"TemplateParam": r.get("templateParam")

} for r in OREvent.propertyLookupList

],

*[{"Entity": "Event series",

"Column": r.get("templateParam"),

"PropertyName": r.get("name"),

"PropertyId": r.get("prop"),

"TemplateParam": r.get("templateParam")

} for r in OREventSeries.propertyLookupList

]

]
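The split between fetching and converting can be tried out without a web server. The following is a minimal sketch using only the standard library; the `sample` data, the function names `flatten_records` and `convert`, and the `csv` format are assumptions for illustration and are not part of ConferenceCorpus (CSV stands in for the tabulate and Excel formats used above).

```python
import csv
import io
import json


def flatten_records(dict_of_lods: dict) -> list:
    """merge the records of all sources into one list, remembering the source"""
    records = []
    for source, lod in dict_of_lods.items():
        for record in lod or []:
            records.append({"source": source, **record})
    return records


def convert(dict_of_lods: dict, markup_format: str = "json") -> str:
    """render the dict of lists of dicts in the requested format"""
    if markup_format == "json":
        return json.dumps(dict_of_lods, indent=2, default=str)
    if markup_format == "csv":
        records = flatten_records(dict_of_lods)
        # collect the column names in first-seen order
        fieldnames = []
        for record in records:
            for key in record:
                if key not in fieldnames:
                    fieldnames.append(key)
        output = io.StringIO()
        writer = csv.DictWriter(output, fieldnames=fieldnames, lineterminator="\n")
        writer.writeheader()
        writer.writerows(records)
        return output.getvalue()
    raise ValueError(f"unsupported format {markup_format}")


# hypothetical sample data in the dict of lists of dicts layout
sample = {
    "dblp": [{"acronym": "WEBIST 2019", "year": 2019}],
    "tibkat": [{"acronym": "WEBIST 2018", "year": 2018, "bk": "54.84"}],
}
print(convert(sample, "csv"))
```

Because the conversion only depends on the dict of lists of dicts, it can be unit tested without querying any data source.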

Test for event_series_api

import json

from dataclasses import asdict

from spreadsheet.googlesheet import GoogleSheet

from corpus.web.eventseries import MetadataMappings, EventSeriesAPI

from tests.datasourcetoolbox import DataSourceTest

from corpus.lookup import CorpusLookup

class TestEventSeriesAPI(DataSourceTest):
    """
    tests EventSeriesAPI
    """

@classmethod

def setUpClass(cls)->None:

super(TestEventSeriesAPI, cls).setUpClass()

cls.lookup=CorpusLookup()

def setUp(self, debug=False, profile=True, timeLimitPerTest=10.0):

DataSourceTest.setUp(self, debug=debug, profile=profile, timeLimitPerTest=timeLimitPerTest)

self.lookup=TestEventSeriesAPI.lookup

def testLookup(self):

"""

check the lookup

"""

self.assertTrue(self.lookup is not None)

debug=self.debug

datasource_count=len(self.lookup.eventCorpus.eventDataSources)

if debug:

print(f"found {datasource_count} datasources")

self.assertTrue(datasource_count>3)

    def test_extractWikidataMapping(self):
        """
        extracts wikidata metadata mapping from given google docs url
        """
        url = "https://docs.google.com/spreadsheets/d/1-6llZSTVxNrYH4HJ0DotMjVu9cTHv2WnT_thfQ-3q14"
        gs = GoogleSheet(url)
        gs.open(["Wikidata"])
        lod=gs.asListOfDicts("Wikidata")
        debug=self.debug
        #debug=True
        if debug:
            print(f"found {len(lod)} mapping records")
            print(json.dumps(lod,indent=2))
        self.assertEqual(23,len(lod))

def test_MetadataMapping(self):

"""

test the metadata mapping

"""

mapping = MetadataMappings()

debug=self.debug

#debug=True

mapping_dict=asdict(mapping)

if debug:

print(json.dumps(mapping_dict,indent=2))

self.assertTrue("WikidataMapping" in mapping_dict)

def testGetEventSeries(self):

'''

tests the multiquerying of event series over api

some 17 secs for test

'''

es_api=EventSeriesAPI(self.lookup)

dict_of_lods=es_api.getEventSeries(name="WEBIST")

debug=self.debug

#debug=True

if debug:

print(json.dumps(dict_of_lods,indent=2,default=str))

self.assertTrue("confref" in dict_of_lods)

self.assertTrue(len(dict_of_lods["confref"]) > 15)

def test_getEventSeriesBkFilter(self):

"""

tests getEventSeries bk filter

see https://github.com/WolfgangFahl/ConferenceCorpus/issues/55

"""

bks_list = ["85.20", "54.65,54.84", "54.84,85"]

es_api=EventSeriesAPI(self.lookup)

for bks in bks_list:

res=es_api.getEventSeries(name="WEBIST", bks=bks)

self.assertIn("tibkat", res)

bksPerRecord = [record.get("bk") for record in res.get("tibkat")]

expectedBks = set(bks.split(","))

for rawBks in bksPerRecord:

self.assertIsNotNone(rawBks)

bks = set(rawBks.split("⇹"))

bks = bks.union({bk.split(".")[0] for bk in bks})

self.assertTrue(bks.intersection(expectedBks))

def test_filterForBk(self):

"""

tests filterForBk

"""

testMatrix = [

(['54.84⇹85.20', '54.84⇹85.20', '54.84⇹81.68⇹85.20⇹88.03⇹54.65', '85.20'], ["54"], 3),

(['54.84⇹85.20', '54.84⇹85.20', '54.84⇹81.68⇹85.20⇹88.03⇹54.65', '85.20'], ["54","85"], 4),

(['54.84⇹85.20', '54.84⇹85.20', '54.84⇹81.68⇹85.20⇹88.03⇹54.65', '85.20'], ["85.20"], 4),

(['54.84⇹85.20', '54.84⇹85.20', '54.84⇹81.68⇹85.20⇹88.03⇹54.65', '85.20'], ["02"], 0),

(['54.84⇹85.20', '54.84⇹85.20', '54.84⇹81.68⇹85.20⇹88.03⇹54.65', '85.20'], ["81.68","88.03"], 1),

(['54.84⇹85.20', '54.84⇹85.20', None, '85.20'], ["54"], 2),

(['54.84⇹85.20', '54.84⇹85.20', None, '85.20'], ["54","null"], 3),

(['54.84⇹85.20', '54.84⇹85.20', None, '85.20'], ["null"], 1),

(['54.84⇹85.20', '54.84⇹85.20', None, '85.20'], ["none"], 1),

]

es_api=EventSeriesAPI(self.lookup)

for recordData, bkFilter, expectedNumberOfRecords in testMatrix:

lod = [{"bk":bk} for bk in recordData]

es_api.filterForBk(lod, bkFilter)

self.assertEqual(len(lod), expectedNumberOfRecords, f"Tried to filter for {bkFilter} and expected {expectedNumberOfRecords} but filter left {len(lod)} in the list")

def testTibkatReducingRecords(self):

"""

tests deduplication of the tibkat records if reduce parameter is set

"""

name="AAAI"

expected={

False: 400,

True: 50

}

es_api=EventSeriesAPI(lookup=self.lookup)

for reduce in (True,False):

with self.subTest(msg=f"Testing with reduce={reduce}", testParam=reduce):

res=es_api.getEventSeries(name=name, reduce=reduce)

self.assertIn("tibkat", res)

tibkat_count=len(res.get("tibkat"))

should=expected[reduce]<=tibkat_count

if reduce:

should=not should

self.assertTrue(should)
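The decision that `filterForBk` makes per record can also be written as a pure function, which makes the rules exercised by `test_filterForBk` easier to follow. This is a sketch under the assumption that it mirrors the logic shown above; the name `keep_record` and the sample `records` list are hypothetical and not part of the code base.

```python
import re
from typing import List, Optional

BK_SEPARATOR = "⇹"


def keep_record(bk_value: Optional[str], allowed_bks: List[str]) -> bool:
    """decide whether a record with the given bk value passes the filter"""
    # "54" selects a whole main class, "54.84" a single subclass
    main_classes = {bk for bk in allowed_bks if bk.isnumeric()}
    sub_classes = {bk for bk in allowed_bks if re.fullmatch(r"\d{1,2}\.\d{2}", bk)}
    # "null" or "none" keeps the records that have no bk at all
    allow_null = any(bk.lower() in ("null", "none") for bk in allowed_bks)
    if bk_value is None:
        return allow_null
    record_bks = set(bk_value.split(BK_SEPARATOR))
    record_main_classes = {bk.split(".")[0] for bk in record_bks}
    return bool(main_classes & record_main_classes or sub_classes & record_bks)


# hypothetical bk values in the style of the test matrix
records = ["54.84⇹85.20", "54.84⇹81.68⇹85.20⇹88.03⇹54.65", None, "85.20"]
for allowed in (["54"], ["85.20"], ["54", "null"], ["02"]):
    kept = [bk for bk in records if keep_record(bk, allowed)]
    print(allowed, len(kept))
```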

Test Result

ConferenceCorpus % scripts/test --venv -tn test_eventseries_api

No new changes in tests or corpus directories.

Starting test testGetEventSeries ... with debug=False ...

test testGetEventSeries ... with debug=False took 8.5 s

.Starting test testLookup ... with debug=False ...

test testLookup ... with debug=False took 0.0 s

.Starting test testTibkatReducingRecords ... with debug=False ...

test testTibkatReducingRecords ... with debug=False took 5.2 s

.Starting test test_MetadataMapping ... with debug=False ...

test test_MetadataMapping ... with debug=False took 0.0 s

.Starting test test_extractWikidataMapping ... with debug=False ...

test test_extractWikidataMapping ... with debug=False took 0.5 s

.Starting test test_filterForBk ... with debug=False ...

test test_filterForBk ... with debug=False took 0.0 s

.Starting test test_getEventSeriesBkFilter ... with debug=False ...

test test_getEventSeriesBkFilter ... with debug=False took 2.9 s

.

----------------------------------------------------------------------

Ran 7 tests in 17.202s

OK

Test first

scripts/test

The scripts/test script runs the Python unit tests from the tests directory:

Usage

Usage: scripts/test [OPTIONS]

Options:

-b, --background Run tests in the background and log output.

-d, --debug Show environment for debugging.

-g, --green Run tests using the green test runner.

-h, --help Display this help message.

-p, --python Specify the Python interpreter to use.

-tn, --test_name Run only the specified test module.

--venv Use a virtual environment for testing.

Example:

scripts/test --python python3.10 --background

Running a single test

Below is a call that runs a single test in a venv environment

scripts/test --venv -tn testPainScale

No new changes in tests or corpus directories.

Starting test testPainImages ... with debug=False ...

test testPainImages ... with debug=False took 0.7 s

.

----------------------------------------------------------------------

Ran 1 test in 0.713s

OK

Example Test result

Ran 161 tests in 1041.419s

FAILED (errors=18, skipped=8)

Continuous Integration

github workflows

We use GitHub workflows for our CI with two workflow YAML files:

- build.yml

- upload-to-pypi.yml

build

The build workflow uses a matrix of Python versions and operating systems. During the migration phase we only use Ubuntu and Python 3.10 to speed up the actions handling. In our project's actions list we can see whether our CI works or still fails.

upload-to-pypi

Apache Server installation

To serve from an Apache Server we need to start the service (e.g. on reboot) and make it available via an apache configuration. We also have to make sure the service is available on the internet and findable by the DNS system.

DNS entry

My services are hosted via Hosteurope, where I can access my DNS entries via its KIS system.

By adding cc2 as a CNAME for on.bitplan.com, http://cc2.bitplan.com now points to http://on.bitplan.com.
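Whether the CNAME has propagated can be checked by comparing what both names resolve to. This is a small sketch using the standard library; the function name `same_target` and the injectable `resolve` parameter are assumptions made for illustration, and hosts with several address records may need a more careful comparison.

```python
import socket
from typing import Callable


def same_target(alias: str, canonical: str,
                resolve: Callable[[str], str] = socket.gethostbyname) -> bool:
    """True if both host names resolve to the same IPv4 address"""
    return resolve(alias) == resolve(canonical)


# usage (needs network access, not executed here):
# print(same_target("cc2.bitplan.com", "on.bitplan.com"))
```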


apache configuration

Our server is an Ubuntu 22.04 LTS machine. The configuration is at

/etc/apache2/sites-available/conferencecorpus.conf

- Line 9: `ServerName cc2.bitplan.com`
  - This sets the address for this virtual server. It's like telling the server, "Use this setting for the website named 'cc2.bitplan.com'."
- Lines 20-21: WebSockets Handling
  - These lines help redirect websocket requests for reactive interactions by NiceGUI.
- Lines 30, 33, 36: `ProxyPassReverse / http://localhost:5005/`
  - This helps keep the website's links working correctly when using tools like NiceGUI.
  - It makes sure that any responses from the server seem like they are coming directly from the website, even though they're actually handled by a separate tool (NiceGUI) running in the background locally.
- Log Directives: `ErrorLog` and `CustomLog`
  - These are for recording what happens on the server.
  - `ErrorLog` keeps track of any problems, while `CustomLog` records all visits and activities. This is important for fixing issues and understanding how people use the website.

conferencecorpus.conf

<VirtualHost *:80 >

# The ServerName directive sets the request scheme, hostname and port that

# the server uses to identify itself. This is used when creating

# redirection URLs. In the context of virtual hosts, the ServerName

# specifies what hostname must appear in the request's Host: header to

# match this virtual host. For the default virtual host (this file) this

# value is not decisive as it is used as a last resort host regardless.

# However, you must set it for any further virtual host explicitly.

ServerName cc2.bitplan.com

ServerAdmin webmaster@bitplan.com

#DocumentRoot /var/www/html

# Available loglevels: trace8, ..., trace1, debug, info, notice, warn,

# error, crit, alert, emerg.

# It is also possible to configure the loglevel for particular

# modules, e.g.

#LogLevel info ssl:warn

ErrorLog ${APACHE_LOG_DIR}/cc_error.log

CustomLog ${APACHE_LOG_DIR}/cc.log combined

# For most configuration files from conf-available/, which are

# enabled or disabled at a global level, it is possible to

# include a line for only one particular virtual host. For example the

# following line enables the CGI configuration for this host only

# after it has been globally disabled with "a2disconf".

#Include conf-available/serve-cgi-bin.conf

RewriteEngine On

RewriteCond %{HTTP:Upgrade} =websocket [NC]

RewriteRule /(.*) ws://localhost:5005/$1 [P,L]

RewriteCond %{HTTP:Upgrade} !=websocket [NC]

RewriteRule /(.*) http://localhost:5005/$1 [P,L]

# make local Conference Corpus webserver available

ProxyPassReverse / http://localhost:5005/

</VirtualHost>
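The two `RewriteCond`/`RewriteRule` pairs implement one decision: requests carrying an `Upgrade: websocket` header are proxied with the `ws` scheme, everything else with `http`. The sketch below restates that decision in Python to make it easy to reason about; `proxy_target` is a hypothetical function and only approximates what Apache does, and the paths in the usage lines are made up.

```python
def proxy_target(path: str, headers: dict, backend: str = "localhost:5005") -> str:
    """return the backend URL a request would be proxied to"""
    # RewriteCond %{HTTP:Upgrade} =websocket [NC] compares case-insensitively
    upgrade = headers.get("Upgrade", "")
    scheme = "ws" if upgrade.lower() == "websocket" else "http"
    # RewriteRule /(.*) passes the part after the leading slash on as $1
    return f"{scheme}://{backend}/{path.lstrip('/')}"


print(proxy_target("/some/page", {}))
print(proxy_target("/some/socket", {"Upgrade": "WebSocket"}))
```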

enabling the website

sudo a2ensite conferencecorpus

Enabling site conferencecorpus.

To activate the new configuration, you need to run:

systemctl reload apache2

# we do a complete restart for good measure

service apache2 restart

The server should now in principle be accessible at http://cc2.bitplan.com but will respond with HTTP status code 503 Service Unavailable until we start the web service.
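The difference between "Apache is not reachable" and "Apache answers but the backend is down" can be checked with a short probe. This is a sketch using only the standard library; the function names `describe_status` and `probe` and the message texts are assumptions for illustration.

```python
import urllib.error
import urllib.request


def describe_status(code: int) -> str:
    """interpret the HTTP status code returned via the Apache proxy"""
    if code == 503:
        return "Apache is up, but the proxied service is not answering yet"
    if 200 <= code < 400:
        return "Apache and the proxied service are both answering"
    return f"unexpected HTTP status {code}"


def probe(url: str, timeout: float = 5.0) -> str:
    """request the given url and describe the outcome"""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return describe_status(response.status)
    except urllib.error.HTTPError as http_error:
        # urlopen raises HTTPError for 4xx and 5xx responses such as 503
        return describe_status(http_error.code)
    except urllib.error.URLError as url_error:
        return f"server not reachable: {url_error.reason}"


# usage (needs network access, not executed here):
# print(probe("http://cc2.bitplan.com"))
```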

crontab entry

We restart our servers on reboot and every night. You need to adapt your crontab entries to your username:

crontab -l | tail -4

# run startup on reboot

@reboot /home/<user>/bin/startup --all

# and every early morning 02:30 h

30 02 * * * /home/<user>/bin/startup --all
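The schedule `30 02 * * *` means "every day at 02:30". To see when the next nightly restart is due, the rule can be sketched in a few lines of Python; `next_nightly_restart` is a hypothetical helper that ignores time zones and daylight saving changes.

```python
from datetime import datetime, time, timedelta


def next_nightly_restart(now: datetime, at: time = time(2, 30)) -> datetime:
    """return the next point in time at which the daily cron entry fires"""
    candidate = datetime.combine(now.date(), at)
    if candidate <= now:
        # today's slot has already passed, so the next run is tomorrow
        candidate += timedelta(days=1)
    return candidate


print(next_nightly_restart(datetime.now()))
```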

startup script

The startup script manages Python services, with error handling (`error`) and usage info (`usage`). This example only shows the single service ConferenceCorpus. In our context we have lots of services that are started this way on reboot or on demand.

- Updates projects from Git (`update`) and manages logs (`prepare_logging`).

- `start_python_service` kills existing instances before restarting.

- Operates on command-line options, with ANSI color-coded output.

startup

#!/bin/bash

# WF 2023-02-25

# startup jobs for Conference Corpus

# ansi colors

blue='\033[0;34m'

red='\033[0;31m'

green='\033[0;32m'

endColor='\033[0m'

# a colored message

color_msg() {

local l_color="$1"

local l_msg="$2"

echo -e "${l_color}$l_msg${endColor}"

}

# error

error() {

local l_msg="$1"

color_msg $red "Error:" 1>&2

color_msg $red "\t$l_msg" 1>&2

exit 1

}

# show usage

usage() {
  echo "Usage: $0 [options]"
  echo "Options:"
  echo "-h |--help: Show this message"
  echo "--cc: Start Conference Corpus"
  echo "--all: Start all services"
  exit 1
}

# start the given python service
start_python_service() {
  local l_name=$1
  local l_giturl=$2
  local l_cmd=$3
  local l_cmd_proc="$4"
  local l_log=$(prepare_logging $l_name)
  update $l_name $l_giturl true
  background_service "$l_cmd" "$l_cmd_proc" $l_log
  # this script defines no log function, so color_msg reports the log location
  color_msg $blue "log is at $l_log"
}

# background service

background_service() {

local l_cmd="$1"

local l_cmd_proc="$2"

local l_log="$3"

pgrep -fla "$l_cmd_proc"

if [ $? -eq 0 ]

then

pkill -f "$l_cmd_proc"

fi

nohup $l_cmd > "$l_log" 2>&1 &

}

# update the given python project

update() {

local l_name=$1

local l_giturl=$2

local l_do_update=$3

local l_srcroot=$HOME/source/python

local l_service_root=$l_srcroot/$l_name

if [ ! -d $l_srcroot ]

then

mkdir -p $l_srcroot

fi

cd $l_srcroot

if [ ! -d $l_service_root ]

then

git clone $l_giturl $l_name

fi

cd $l_service_root

if [ "$l_do_update" == "true" ]

then

git pull

python -m venv .venv

source .venv/bin/activate

pip install --upgrade pip

scripts/install

fi

}

# prepare logging for the given service
prepare_logging() {
  local l_name=$1
  local l_logdir=/var/log/$l_name
  local l_log=$l_logdir/$l_name.log
  local l_idn=$(id -un)
  local l_idg=$(id -gn)
  if [ ! -d $l_logdir ]
  then
    sudo mkdir -p $l_logdir
  fi
  # owner:group is the documented chown separator
  sudo chown $l_idn:$l_idg $l_logdir
  sudo touch $l_log
  sudo chown $l_idn:$l_idg $l_log
  echo "$l_log"
}

# start Conference Corpus

start_conference_corpus() {

start_python_service cc https://github.com/WolfgangFahl/ConferenceCorpus "ccServer --serve" "ccServer --serve"

}

cd $HOME

. .profile

verbose=true

if [ $# -lt 1 ]

then

usage

else

while [ "$1" != "" ]

do

option="$1"

case $option in

"-h"|"--help")

usage

;;

"--cc")

start_conference_corpus

;;

"--all")

start_conference_corpus

;;

esac

shift

done

fi

usage

Usage: startup [options]

Options:

-h |--help: Show this message

--cc: Start Conference Corpus

--all: Start all services