Windows

On Windows, quite a few of the scripts and tools mentioned here may not work. For best results, install an environment that can run them, such as Docker or the Windows Subsystem for Linux (WSL). See e.g. Ubuntu in WSL for more details.

Linux

Example Use Case

This tutorial uses the ConferenceCorpus as its use case. As part of my research for the ConfIDent Project, I am gathering metadata for scientific events from different data sources. A proper frontend has been lacking for the past few years, although there have been several attempts to create a webserver and RESTful services.

As of 2023-11, the project has been migrated to nicegui using the nicegui widgets (demo).

The GitHub ticket for the migration is Issue 58 - refactor to use nicegui.

Setting up the project

The project is hosted on GitHub; see ConferenceCorpus.

README

A GitHub README is a document that introduces and explains a project hosted on GitHub, providing essential information such as its purpose, how to install and use it, and its features. It is important because it serves as the first point of contact for anyone encountering the project, helping them understand what the project is about and how to engage with it.

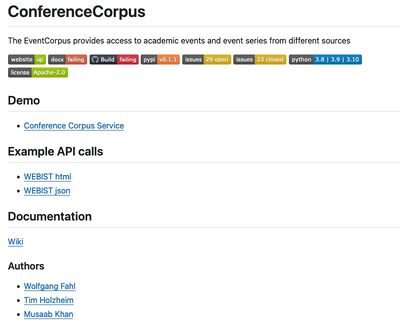

The README consists of

- header

- badges

- link to demo

- example API calls

- links to documentation

- authors list

README screenshot as of start of migration

License

The LICENSE file in a software project specifies the legal terms under which the software can be used, modified, and shared, defining the rights and restrictions for users and developers. Using the Apache License is a good choice for many projects because it is a permissive open-source license that allows for broad freedom in use and distribution, while also providing legal protection against patent claims and requiring preservation of copyright notices.

pyproject.toml

pyproject.toml is a configuration file used in Python projects to define project metadata, dependencies, and build system requirements, providing a consistent and standardized way to configure Python projects. For more information, see pyproject.toml documentation.

Hatchling is a popular choice for a build system because it offers a modern, fast, and straightforward approach to building and managing Python projects, aligning well with the standardized structure provided by pyproject.toml. Learn more about Hatchling at Hatchling on GitHub.

This pyproject.toml file configures the Python project 'ConferenceCorpus', an API for accessing academic events and event series from different sources.

Build System

The [build-system] section defines the build requirements and backend. It specifies the use of 'hatchling' as the build backend:

```toml
[build-system]
requires = ["hatchling"]
build-backend = "hatchling.build"
```

Hatchling is a modern build backend for Python projects, aligning with PEP 517.

Project Metadata

Under the [project] section, several key details about the project are listed:

```toml
[project]
name = "ConferenceCorpus"
description = "python api providing access to academic events and event series from different sources"
home-page = "http://wiki.bitplan.com/index.php/ConferenceCorpus"
readme = "README.md"
license = {text = "Apache-2.0"}
authors = [{name = "Wolfgang Fahl", email = "wf@WolfgangFahl.com"}]
maintainers = [{name = "Wolfgang Fahl", email = "wf@WolfgangFahl.com"}]
requires-python = ">=3.9"
classifiers = ['Programming Language :: Python', ...]
dynamic = ["version"]
```

This section sets the project's name, description, homepage, README, license, authors, maintainers, required Python version, classifiers, and dynamic versioning.

Dependencies

The dependencies section lists the required external libraries for the project to function:

```toml
dependencies = [
  "pylodstorage>=0.4.9",
  ...
]
```

These dependencies ensure that the necessary packages are installed for the project.
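Each entry combines a distribution name with a version constraint. The following sketch splits such a string into its parts. It is a deliberately simplified illustration: the helper `split_requirement` and its pattern are hypothetical, handle only a name with at most one comparison clause, and are not a full parser for the requirement syntax that installers accept (extras, markers, and multiple clauses are ignored).

```python
import re

# Simplified pattern: a name, optionally followed by one comparison clause.
REQUIREMENT_PATTERN = re.compile(
    r"^\s*(?P<name>[A-Za-z0-9._-]+)\s*"
    r"(?:(?P<op>>=|<=|==|!=|~=|>|<)\s*(?P<version>[A-Za-z0-9.*+!_-]+))?\s*$"
)


def split_requirement(requirement: str) -> tuple:
    """Return (name, operator, version); operator and version may be None."""
    match = REQUIREMENT_PATTERN.match(requirement)
    if match is None:
        raise ValueError(f"unsupported requirement string: {requirement!r}")
    return match.group("name"), match.group("op"), match.group("version")


name, op, version = split_requirement("pylodstorage>=0.4.9")
print(f"name={name} operator={op} version={version}")
```

For the entry shown above, this separates the name `pylodstorage` from the minimum version `0.4.9`.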

Project Scripts

The [project.scripts] section defines executable commands for various functionalities, with a focus on the `ccServer` script for starting the web server:

```toml
[project.scripts]
ccServer = "corpus.web.cc_cmd:main"
...
```

Understanding the ccServer Script

- Command: `ccServer` is the command that users will run in the terminal.

- Function: The value `"corpus.web.cc_cmd:main"` specifies the Python function that gets executed when `ccServer` is invoked.

  - Package and Module: The first part, `corpus.web.cc_cmd`, is the Python package and module where the function resides.

  - Function Name: The second part, `main`, is the name of the function within that module.

Usage

To start the webserver, a user would execute the following command in the terminal:

```shell
$ ccServer
```

This command calls the `main` function from the `cc_cmd.py` file located in the `corpus.web` package, effectively initiating the web server for the ConferenceCorpus project.
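The lookup behind a `module:function` value can be sketched with `importlib`. The helper name `resolve_entry_point` is hypothetical, and the sketch handles only the simple form used here. To stay runnable without the project installed, the standard library's `json:dumps` stands in for `corpus.web.cc_cmd:main`.

```python
import importlib


def resolve_entry_point(spec: str):
    """Import the module named before ':' and return the attribute named after it."""
    module_name, _, function_name = spec.partition(":")
    if not module_name or not function_name:
        raise ValueError(f"expected 'module:function', got {spec!r}")
    module = importlib.import_module(module_name)
    return getattr(module, function_name)


# json:dumps is a stand-in; with the project installed,
# "corpus.web.cc_cmd:main" would be resolved in the same way.
dumps = resolve_entry_point("json:dumps")
print(dumps({"port": 5005}))
```

The command installed for `ccServer` does essentially this lookup and then passes the return value of `main()` to `sys.exit`.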

Project URLs

The [project.urls] section provides links to the project's home, source, and documentation:

```toml
[project.urls]
Home = "http://wiki.bitplan.com/index.php/ConferenceCorpus"
Source = "https://github.com/WolfgangFahl/ConferenceCorpus"
Documentation = "https://github.com/WolfgangFahl/ConferenceCorpus/issues"
```

These URLs direct users to relevant resources for the project.

Command line

Thanks to the WebserverCmd base class from nicegui widgets, a command-line startup script in Python needs only a few lines:

```python
'''
Created on 2023-09-10

@author: wf
'''
import sys

from ngwidgets.cmd import WebserverCmd

from corpus.web.cc_webserver import ConferenceCorpusWebserver


class ConferenceCorpusCmd(WebserverCmd):
    """
    command line handling for Conference Corpus Webserver
    """


def main(argv: list = None):
    """
    main call
    """
    cmd = ConferenceCorpusCmd(
        config=ConferenceCorpusWebserver.get_config(),
        webserver_cls=ConferenceCorpusWebserver,
    )
    exit_code = cmd.cmd_main(argv)
    return exit_code


DEBUG = 0
if __name__ == "__main__":
    if DEBUG:
        sys.argv.append("-d")
    sys.exit(main())
```

Usage

```text
ccServer -h
usage: ccServer [-h] [-a] [-c] [-d] [--debugServer DEBUGSERVER]
                [--debugPort DEBUGPORT] [--debugRemotePath DEBUGREMOTEPATH]
                [--debugLocalPath DEBUGLOCALPATH] [-l] [-i INPUT] [-rol]
                [--host HOST] [--port PORT] [-s] [-V]

Conference Corpus Volume browser

options:
  -h, --help            show this help message and exit
  -a, --about           show about info [default: False]
  -c, --client          start client [default: False]
  -d, --debug           show debug info [default: False]
  --debugServer DEBUGSERVER
                        remote debug Server
  --debugPort DEBUGPORT
                        remote debug Port
  --debugRemotePath DEBUGREMOTEPATH
                        remote debug Server path mapping - remotePath - path
                        on debug server
  --debugLocalPath DEBUGLOCALPATH
                        remote debug Server path mapping - localPath - path on
                        machine where python runs
  -l, --local           run with local file system access [default: False]
  -i INPUT, --input INPUT
                        input file
  -rol, --render_on_load
                        render on load [default: False]
  --host HOST           the host to serve / listen from [default: localhost]
  --port PORT           the port to serve from [default: 5005]
  -s, --serve           start webserver [default: False]
  -V, --version         show program's version number and exit
```
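The help text has the shape produced by Python's `argparse` module. The sketch below rebuilds four of the listed options (`-d`, `--host`, `--port`, `-s`) with the defaults shown above, to illustrate how such flags are typically declared. It is not the code of the WebserverCmd base class; the function name `build_parser` and the integer type for `--port` are assumptions.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Illustrative parser mirroring a few options from the help text."""
    parser = argparse.ArgumentParser(
        prog="ccServer", description="Conference Corpus Volume browser"
    )
    parser.add_argument(
        "-d", "--debug", action="store_true",
        help="show debug info [default: %(default)s]",
    )
    parser.add_argument(
        "--host", default="localhost",
        help="the host to serve / listen from [default: %(default)s]",
    )
    parser.add_argument(
        "--port", type=int, default=5005,  # int type is an assumption
        help="the port to serve from [default: %(default)s]",
    )
    parser.add_argument(
        "-s", "--serve", action="store_true",
        help="start webserver [default: %(default)s]",
    )
    return parser


# Parse an explicit argument list instead of sys.argv for the demonstration
args = build_parser().parse_args(["--serve", "--port", "8080"])
print(f"serve={args.serve} host={args.host} port={args.port} debug={args.debug}")
```

Passing an explicit list to `parse_args` matches the way `main(argv)` above accepts an optional argument list, which makes the command line easy to exercise from tests.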