WikiData Import 2020-07-15

See also Get_your_own_copy_of_WikiData. This was the fifth attempt after four failures. The main success factor was the use of a 4 TB SSD, kindly supplied by the ConfIDent project.

Environment

- Mac Pro Mid 2010

- 12 core 3.46 GHz

- 64 GB RAM

- macOS High Sierra 10.13.6

- Source disk: 4 TB 7200 rpm hard disk, WD Gold WDC WD4002FYYZ (Blackmagic speed rating: 175 MB/s write, 175 MB/s read)

- Target disk: 4 TB SSD, Samsung 860 EVO (Blackmagic speed rating: 257 MB/s write, 270 MB/s read)

java -version

openjdk version "11.0.5" 2019-10-15

OpenJDK Runtime Environment AdoptOpenJDK (build 11.0.5+10)

OpenJDK 64-Bit Server VM AdoptOpenJDK (build 11.0.5+10, mixed mode)

Summary

- goal: replicate the success story reported in https://issues.apache.org/jira/projects/JENA/issues/JENA-1909

- download of 24 GB took some 90 min

- unzipping to some 670 GB took some 4 h 30 min

- Import took 4 d 14 h

- resulting TDB store is 700 GB
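
The import figures above can be cross-checked against the loader's final log line, which reports 395.522 seconds for 5.253.753.313 triples (dots are thousands separators in that log). The following is only a minimal sketch; `format_duration` is a name made up for this illustration and is not part of the original scripts.

```shell
# Convert a duration in whole seconds to days, hours and minutes.
# format_duration is a hypothetical helper for illustration only.
format_duration() {
  secs=$1
  days=$(( secs / 86400 ))
  hours=$(( (secs % 86400) / 3600 ))
  mins=$(( (secs % 3600) / 60 ))
  echo "$days d $hours h $mins min"
}

# Values taken from the final loader log line (separators removed)
format_duration 395522                        # 4 d 13 h 52 min
echo "$(( 5253753313 / 395522 )) triples/s"   # 13283 triples/s
```

The result matches the rounded "4 d 14 h" given in the summary and the rate reported by the loader.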

Import Timing

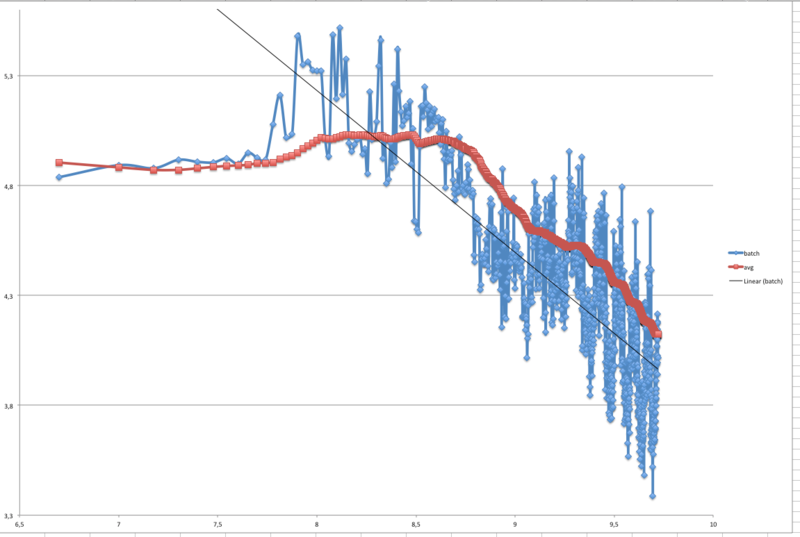

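The diagram plots the import rate (per batch and as a running average, in triples per second) against the number of triples imported. Both axes are logarithmic.

Even with an SSD there is a logarithmic degradation of import speed: in the loader log below, the average rate falls from about 152,000 triples/s after the first 500,000 triples to about 13,300 triples/s at the end.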

Test Query

SELECT (COUNT(*) as ?Triples) WHERE { ?s ?p ?o}

result

"5250681892"^^xsd:integer

log for query

14:51:11 INFO Fuseki :: [7] POST http://localhost:3030/wikidata

14:51:11 INFO Fuseki :: [7] Query = SELECT (COUNT(*) as ?Triples) WHERE { ?s ?p ?o}

16:23:07 INFO Fuseki :: [7] 200 OK (5.516,224 s)

same query on a copy of the store on a rotating disk

06:34:25 INFO Server :: Started 2020/07/30 06:34:25 CEST on port 3030

06:34:41 INFO Fuseki :: [5] POST http://jena.bitplan.com/wikidata

06:34:41 INFO Fuseki :: [5] Query = SELECT (COUNT(*) as ?Triples) WHERE { ?s ?p ?o}

15:19:43 INFO Fuseki :: [5] 200 OK (31,501.769 s)
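
The two query logs can be compared by taking the difference between the "Query" and "200 OK" timestamps. The sketch below assumes both timestamps fall on the same day, which is the case for both logs; `elapsed_seconds` is a hypothetical helper name.

```shell
# Seconds between two HH:MM:SS timestamps on the same day.
# elapsed_seconds is a hypothetical helper for illustration only.
elapsed_seconds() {
  awk -v s="$1" -v e="$2" 'BEGIN {
    split(s, a, ":"); split(e, b, ":")
    print (b[1] * 3600 + b[2] * 60 + b[3]) - (a[1] * 3600 + a[2] * 60 + a[3])
  }'
}

ssd=$(elapsed_seconds 14:51:11 16:23:07)   # 5516
hdd=$(elapsed_seconds 06:34:41 15:19:43)   # 31502
awk -v a="$hdd" -v b="$ssd" 'BEGIN { printf "rotating disk / SSD = %.1f\n", a / b }'
```

The differences agree with the durations Fuseki reports itself (5.516,224 s and 31,501.769 s, written with different separator conventions), so the count query took roughly 5.7 times longer on the rotating disk.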

Download and unpack

The "truthy" dataset was downloaded for this import.

wget https://dumps.wikimedia.org/wikidatawiki/entities/latest-truthy.nt.bz2

--2020-07-15 15:24:25-- https://dumps.wikimedia.org/wikidatawiki/entities/latest-truthy.nt.bz2

Resolving dumps.wikimedia.org (dumps.wikimedia.org)... 2620::861:1:208:80:154:7, 208.80.154.7

Connecting to dumps.wikimedia.org (dumps.wikimedia.org)|2620::861:1:208:80:154:7|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 25868964531 (24G) [application/octet-stream]

Saving to: ‘latest-truthy.nt.bz2’

latest-truthy.nt.bz 2%[ ] 546.01M 4.66MB/s eta 85m 57s

ls -l latest-truthy.nt.bz2

-rw-r--r-- 1 wf admin 25868964531 Jul 11 23:33 latest-truthy.nt.bz2

bzip2 -dk latest-truthy.nt.bz2

ls -l latest-truthy.nt

-rw------- 1 wf admin 671598919680 Jul 15 21:15 latest-truthy.nt

zeus:wikidata wf$ls -l latest-truthy.nt

-rw-r--r-- 1 wf admin 671749317281 Jul 11 23:33 latest-truthy.nt
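
The two `ls -l` listings give the compressed and unpacked sizes in bytes, from which the expansion factor follows. This is a minimal sketch; `expansion_ratio` is a hypothetical helper name, and the factor only describes this particular dump.

```shell
# Ratio of unpacked size to compressed size, both in bytes.
# expansion_ratio is a hypothetical helper for illustration only.
expansion_ratio() {
  awk -v c="$1" -v u="$2" 'BEGIN { printf "%.2f\n", u / c }'
}

# Sizes from the ls -l output above
expansion_ratio 25868964531 671749317281   # 25.97
```

Since `bzip2 -dk` keeps the compressed file, the source disk has to hold both files at once, roughly 27 times the size of the download.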

start and progress

nohup ./wikidata2jena&

tail -f tdb2-err.log

21:16:57 INFO loader :: Loader = LoaderParallel

21:16:57 INFO loader :: Start: latest-truthy.nt

21:17:00 INFO loader :: Add: 500.000 latest-truthy.nt (Batch: 151.883 / Avg: 151.883)

21:17:02 INFO loader :: Add: 1.000.000 latest-truthy.nt (Batch: 243.309 / Avg: 187.020)

...

21:33:21 INFO loader :: Add: 100.000.000 latest-truthy.nt (Batch: 209.292 / Avg: 101.592)

21:33:21 INFO loader :: Elapsed: 984,33 seconds [2020/07/15 21:33:21 MESZ]

...

22:41:36 INFO loader :: Add: 500.000.000 latest-truthy.nt (Batch: 54.153 / Avg: 98.446)

22:41:36 INFO loader :: Elapsed: 5.078,89 seconds [2020/07/15 22:41:36 MESZ]

...

02:55:36 INFO loader :: Add: 1.000.000.000 latest-truthy.nt (Batch: 21.504 / Avg: 49.215)

02:55:36 INFO loader :: Elapsed: 20.318,94 seconds [2020/07/16 02:55:36 MESZ]

...

13:47:17 INFO loader :: Add: 2.000.000.000 latest-truthy.nt (Batch: 32.036 / Avg: 33.658)

13:47:17 INFO loader :: Elapsed: 59.420,03 seconds [2020/07/16 13:47:17 MESZ]

...

06:10:12 INFO loader :: Add: 3.000.000.000 latest-truthy.nt (Batch: 10.900 / Avg: 25.338)

06:10:13 INFO loader :: Elapsed: 118.395,31 seconds [2020/07/17 06:10:12 MESZ]

...

09:33:14 INFO loader :: Add: 4.000.000.000 latest-truthy.nt (Batch: 11.790 / Avg: 18.435)

09:33:14 INFO loader :: Elapsed: 216.976,77 seconds [2020/07/18 09:33:14 MESZ]

...

00:55:21 INFO loader :: Add: 5.000.000.000 latest-truthy.nt (Batch: 4.551 / Avg: 13.939)

00:55:21 INFO loader :: Elapsed: 358.703,75 seconds [2020/07/20 00:55:21 MESZ]

...

11:02:06 INFO loader :: Add: 5.253.500.000 latest-truthy.nt (Batch: 10.555 / Avg: 13.296)

11:02:38 INFO loader :: Finished: latest-truthy.nt: 5.253.753.313 tuples in 395140,91s (Avg: 13.295)

11:05:27 INFO loader :: Finish - index SPO

11:08:24 INFO loader :: Finish - index POS

11:08:59 INFO loader :: Finish - index OSP

11:08:59 INFO loader :: Time = 395.522,378 seconds : Triples = 5.253.753.313 : Rate = 13.283 /s
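
The "Avg" column is a running average and therefore hides how slow the late phases are. The rate within one interval can be computed from two "Add"/"Elapsed" checkpoints. The sketch below takes the numbers by hand from the log above, with the separators converted to plain notation; `interval_rate` is a hypothetical helper name.

```shell
# Triples per second between two loader checkpoints.
# Arguments: triples1 elapsed1 triples2 elapsed2 (plain numbers, "." as decimal point).
# interval_rate is a hypothetical helper for illustration only.
interval_rate() {
  awk -v t1="$1" -v e1="$2" -v t2="$3" -v e2="$4" \
    'BEGIN { printf "%.0f\n", (t2 - t1) / (e2 - e1) }'
}

interval_rate 0 0 1000000000 20318.94                     # first billion: 49215
interval_rate 4000000000 216976.77 5000000000 358703.75   # fifth billion: 7056
```

By this measure the fifth billion triples were loaded at about one seventh of the speed of the first billion.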

tail -f nohup.out

x apache-jena-3.16.0/lib-src/jena-arq-3.16.0-sources.jar

x apache-jena-3.16.0/lib-src/jena-core-3.16.0-sources.jar

x apache-jena-3.16.0/lib-src/jena-tdb-3.16.0-sources.jar

x apache-jena-3.16.0/lib-src/jena-rdfconnection-3.16.0-sources.jar

x apache-jena-3.16.0/lib-src/jena-shacl-3.16.0-sources.jar

x apache-jena-3.16.0/lib-src/jena-cmds-3.16.0-sources.jar

creating /Volumes/Torterra/wikidata/data directory

creating temporary directory /Volumes/Torterra/wikidata/tmp

start loading latest-truthy.nt to /Volumes/Torterra/wikidata/data at 2020-07-15T19:16:54Z

finished loading latest-truthy.nt to /Volumes/Torterra/wikidata/data at 2020-07-20T09:09:04Z

Trivia

Scripts

wikidata2jena

#!/bin/bash

# WF 2020-05-10

# global settings

jena=apache-jena-3.16.0

tgz=$jena.tar.gz

jenaurl=http://mirror.easyname.ch/apache/jena/binaries/$tgz

base=/Volumes/Torterra/wikidata

data=$base/data

tdbloader=$jena/bin/tdb2.tdbloader

getjena() {

# download

if [ ! -f $tgz ]

then

echo "downloading $tgz from $jenaurl"

wget $jenaurl

else

echo "$tgz already downloaded"

fi

# unpack

if [ ! -d $jena ]

then

echo "unpacking $jena from $tgz"

tar xvzf $tgz

else

echo "$jena already unpacked"

fi

# create data directory

if [ ! -d $data ]

then

echo "creating $data directory"

mkdir -p $data

else

echo "$data directory already created"

fi

}

#

# show the given message with the current UTC timestamp

#

timestamp() {

local msg="$1"

local ts=$(date -u +"%Y-%m-%dT%H:%M:%SZ")

echo "$msg at $ts"

}

#

# load data for the given data dir and input

#

loaddata() {

local data="$1"

local input="$2"

timestamp "start loading $input to $data"

$tdbloader --loader=parallel --loc "$data" "$input" > tdb2-out.log 2> tdb2-err.log

timestamp "finished loading $input to $data"

}

getjena

export TMPDIR=$base/tmp

if [ ! -d "$TMPDIR" ]

then

echo "creating temporary directory $TMPDIR"

mkdir -p "$TMPDIR"

else

echo "using temporary directory $TMPDIR"

fi

loaddata $data latest-truthy.nt

fuseki

This script starts a Fuseki server and makes the imported Wikidata set available for querying.

#!/bin/bash

# WF 2020-06-25

# Jena Fuseki server installation

# see https://jena.apache.org/documentation/fuseki2/fuseki-run.html

version=3.16.0

fuseki=apache-jena-fuseki-$version

if [ ! -d $fuseki ]

then

if [ ! -f $fuseki.tar.gz ]

then

wget http://archive.apache.org/dist/jena/binaries/$fuseki.tar.gz

else

echo $fuseki.tar.gz already downloaded

fi

echo "unpacking $fuseki.tar.gz"

tar xvfz $fuseki.tar.gz

else

echo $fuseki already downloaded and unpacked

fi

cd $fuseki

java -jar fuseki-server.jar --tdb2 --loc=../data /wikidata
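
Once the server is running, the test query can also be sent from the command line. The following is a sketch under assumptions: it presumes `curl` is installed and that the dataset is reachable at the URL shown in the query log above; `run_query` and the `FUSEKI_ENDPOINT` variable are names made up for this illustration.

```shell
# Endpoint as it appears in the query log above; adjust host and port as needed.
# FUSEKI_ENDPOINT is a hypothetical override variable.
endpoint="${FUSEKI_ENDPOINT:-http://localhost:3030/wikidata}"

# The test query from this page.
count_query='SELECT (COUNT(*) AS ?Triples) WHERE { ?s ?p ?o }'

# Send a SPARQL query as a form-encoded POST and print the JSON result.
# run_query is a hypothetical helper, not part of the original scripts.
run_query() {
  curl -s -H 'Accept: application/sparql-results+json' \
       --data-urlencode "query=$1" "$endpoint"
}

# Usage (expect a long wait: the count took about 1.5 hours on the SSD):
# run_query "$count_query"
```

The call is left commented out because a full count over the store runs for hours; a query with a `LIMIT` is a quicker way to check that the endpoint answers.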

log2csv

This script extracts the import progress from the import log into a CSV file so that it can be analyzed, e.g. with spreadsheet software, as was done for the diagram above.

#!/bin/bash

# create a CSV file from the loader log to analyze import speed

# degradation

# 2020-07-16 WF

target=capri:/bitplan/Projekte/2020/ConfIDent/wikidata/import

for log in tdb2-err.log

do

csv="${log%.log}.csv"

echo "extracting $csv from $log"

# Example

# 07:33:45 INFO loader :: Add: 1.420.000.000 latest-truthy.nt (Batch: 54.036 / Avg: 38.369)

# 07:33:45 INFO loader :: Elapsed: 37.008,24 seconds [2020/07/16 07:33:45 MESZ]

grep -B1 Elapsed "$log" | awk '

BEGIN {

print ("elapsed;add;batch;avg")

}

/Add:/ {

add=$6

batch=$9

avg=$12

gsub ("\\)","",avg)

#print add

#print batch

#print avg

next

}

/Elapsed:/ {

elapsed=$6

printf("%s;%s;%s;%s\n",elapsed,add,batch,avg)

}

' > $csv

done

scp tdb2-err.csv $target/importStats2020-07.csv
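
The CSV produced this way keeps the number format of the loader log, with "." as thousands separator and "," as decimal separator. Tools that expect plain decimal numbers need a conversion first. The following is a minimal sketch of such a step; `normalize_csv` is a hypothetical helper name and assumes the semicolon-separated layout written by the script above.

```shell
# Convert numbers like 1.234.567,89 to 1234567.89 in every data row.
# The header line is passed through unchanged.
# normalize_csv is a hypothetical helper for illustration only.
normalize_csv() {
  awk 'NR == 1 { print; next }
       { gsub(/\./, ""); gsub(/,/, "."); print }'
}

# Example with one row taken from the loader log
printf 'elapsed;add;batch;avg\n5.078,89;500.000.000;54.153;98.446\n' | normalize_csv
```

For the real file this could be run as `normalize_csv < tdb2-err.csv > tdb2-err-plain.csv`, where the output file name is again only an example.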