Difference between revisions of "Snapquery"

(→Usage) |

|||

| (17 intermediate revisions by the same user not shown) | |||

| Line 7: | Line 7: | ||

|title=snapquery | |title=snapquery | ||

|url=https://github.com/WolfgangFahl/snapquery | |url=https://github.com/WolfgangFahl/snapquery | ||

| − | |version=0. | + | |version=0.2.3 |

|description=Just Query wikidata and other SPARQL endpoints by qury name - a frontend to Introduce Named Queries and Named Query Middleware to wikidata and other SPARQL endpoints | |description=Just Query wikidata and other SPARQL endpoints by qury name - a frontend to Introduce Named Queries and Named Query Middleware to wikidata and other SPARQL endpoints | ||

| − | |date= | + | |date=2025-12-04 |

|since=2024-05-03 | |since=2024-05-03 | ||

}} | }} | ||

| + | |||

= Motivation = | = Motivation = | ||

Querying Knowledge Graphs such as wikidata that are based on {{Link|target=SPARQL}} is too complex for mere mortals. | Querying Knowledge Graphs such as wikidata that are based on {{Link|target=SPARQL}} is too complex for mere mortals. | ||

| Line 26: | Line 27: | ||

== Web == | == Web == | ||

=== nominate === | === nominate === | ||

| − | To nominate a query you need to first identify | + | To nominate a query, you need to first identify yourself with a persistent identifier (PID) such as: |

| − | * ORCID e.g. [https://orcid.org/0000-0003-1279-3709 0000-0003-1279-3709 (Tim Berners-Lee) | + | |

| − | * Wikidata ID e.g. [https://www.wikidata.org/wiki/Q80 Q80 (Tim Berners-Lee) | + | * '''ORCID''': e.g., [https://orcid.org/0000-0003-1279-3709 0000-0003-1279-3709] (Tim Berners-Lee) |

| − | * dblp author ID e.g. [https://dblp.org/pid/b/TimBernersLee.html b/TimBernersLee (Tim- | + | * '''Wikidata ID''': e.g., [https://www.wikidata.org/wiki/Q80 Q80] (Tim Berners-Lee) |

| + | * '''dblp author ID''': e.g., [https://dblp.org/pid/b/TimBernersLee.html b/TimBernersLee] (Tim Berners-Lee) | ||

| + | |||

You can either enter such an ID directly or use the lookup functionality to do so. | You can either enter such an ID directly or use the lookup functionality to do so. | ||

| + | |||

| + | After identifying yourself, you can then assign the following information to your query: | ||

| + | * a domain | ||

| + | * a namespace | ||

| + | * a name | ||

| + | * a title | ||

| + | * a description | ||

| + | * a URL | ||

| + | * a SPARQL query | ||

| + | * comments | ||

| + | |||

| + | For example, the prominent [http://snapquery.bitplan.com/query/snapquery-examples/cats cats] query would be specified as: | ||

| + | |||

| + | * '''Domain''': wikidata.org | ||

| + | * '''Name''': Cats | ||

| + | * '''Namespace''': snapquery-examples | ||

| + | * '''Title''': Cats on Wikidata | ||

| + | * '''Description''': This query retrieves all items classified under 'house cat' (Q146) on Wikidata | ||

| + | * '''Query''': | ||

| + | <source lang='sparql'> | ||

| + | # snapquery cats example | ||

| + | SELECT ?item ?itemLabel | ||

| + | WHERE { | ||

| + | ?item wdt:P31 wd:Q146. # Must be a cat | ||

| + | OPTIONAL { ?item rdfs:label ?itemLabel. } | ||

| + | FILTER (LANG(?itemLabel) = "en") | ||

| + | } | ||

| + | </source> | ||

| + | === Example === | ||

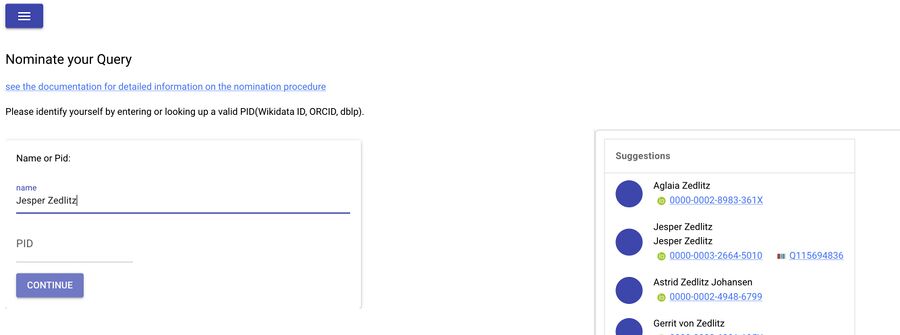

| + | ==== Nominators Persistent Identifier ==== | ||

| + | [https://cr.bitplan.com/index.php/Jesper_Zedlitz Jesper Zedlitz] | ||

| + | [[File:ScreenShotNomination2025-06-20-applicant.jpg|900px]] | ||

| + | |||

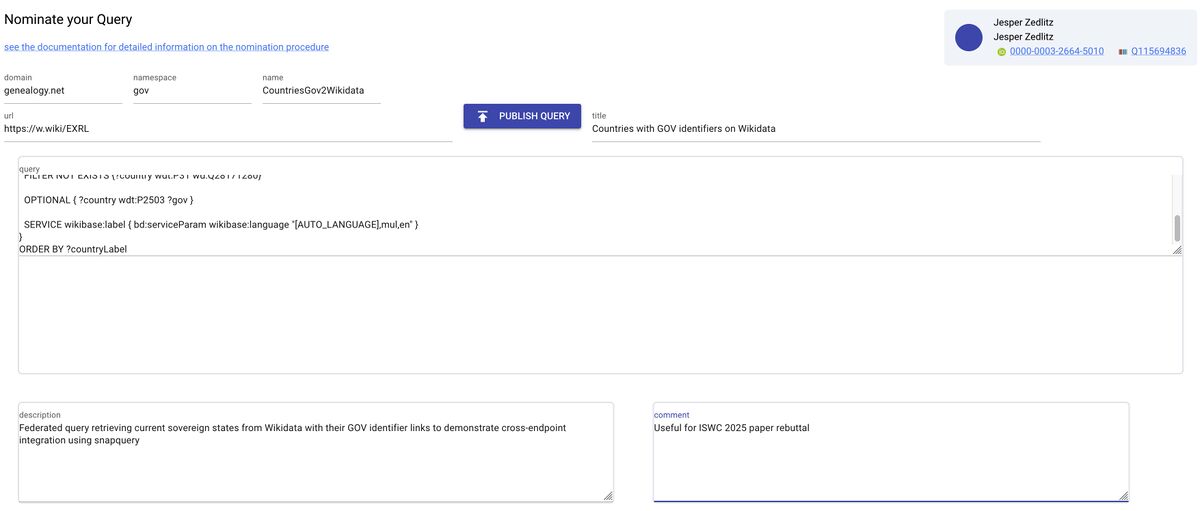

| + | ==== Nominated Query ==== | ||

| + | see https://github.com/WolfgangFahl/snapquery/issues/61 | ||

| + | |||

| + | [[File:ScreenShotNomination2025-06-20-publish.jpg|1200px]] | ||

== RESTFul API == | == RESTFul API == | ||

http://snapquery.bitplan.com/docs | http://snapquery.bitplan.com/docs | ||

| − | === query === | + | |

| + | === cats query === | ||

| + | https://snapquery.bitplan.com/query/wikidata.org/snapquery-examples/cats | ||

| + | |||

<source lang=bash' highlight="1,2"> | <source lang=bash' highlight="1,2"> | ||

curl 'https://snapquery.bitplan.com/api/query/wikidata-examples/cats.mediawiki?limit=5' | curl 'https://snapquery.bitplan.com/api/query/wikidata-examples/cats.mediawiki?limit=5' | ||

| Line 81: | Line 124: | ||

== Commandline == | == Commandline == | ||

| − | === Help === | + | === snapquery === |

| + | ==== Help ==== | ||

<source lang='bash' highlight='1'> | <source lang='bash' highlight='1'> | ||

snapquery -h | snapquery -h | ||

| + | snapquery % snapquery -h | ||

usage: snapquery [-h] [-a] [--apache APACHE] [-c] [-d] | usage: snapquery [-h] [-a] [--apache APACHE] [-c] [-d] | ||

[--debugServer DEBUGSERVER] [--debugPort DEBUGPORT] | [--debugServer DEBUGSERVER] [--debugPort DEBUGPORT] | ||

| Line 89: | Line 134: | ||

[--debugLocalPath DEBUGLOCALPATH] [-l] [-i INPUT] [-rol] | [--debugLocalPath DEBUGLOCALPATH] [-l] [-i INPUT] [-rol] | ||

[--host HOST] [--port PORT] [-s] [-V] [-ep ENDPOINTPATH] | [--host HOST] [--port PORT] [-s] [-V] [-ep ENDPOINTPATH] | ||

| − | [-en | + | [-en {wikidata,wikidata-triply,wikidata-qlever,wikidata-openlinksw,wikidata-scatter,orkg,dblp,dblp-qlever,dblp-trier,wikidata-scholarly-experimental,wikidata-main-experimental}] |

| + | [-idb] [-le] [-lm] [-ln] [-lg] [-tq] [--limit LIMIT] | ||

| + | [--params PARAMS] [--domain DOMAIN] [--namespace NAMESPACE] | ||

| + | [-qn QUERYNAME] | ||

| + | [-f {csv,json,html,xml,tsv,latex,mediawiki,raw,github}] | ||

| + | [--import IMPORT_FILE] [--context CONTEXT] | ||

| + | [--prefix_merger {RAW,SIMPLE_MERGER,ANALYSIS_MERGER}] | ||

| + | [query_id] | ||

Introduce Named Queries and Named Query Middleware to wikidata | Introduce Named Queries and Named Query Middleware to wikidata | ||

| + | |||

| + | positional arguments: | ||

| + | query_id Query ID in the format 'name[--namespace[@domain]]' | ||

options: | options: | ||

| Line 122: | Line 177: | ||

path to yaml file to configure endpoints to use for | path to yaml file to configure endpoints to use for | ||

queries | queries | ||

| − | -en | + | -en {wikidata,wikidata-triply,wikidata-qlever,wikidata-openlinksw,wikidata-scatter,orkg,dblp,dblp-qlever,dblp-trier,wikidata-scholarly-experimental,wikidata-main-experimental}, --endpointName {wikidata,wikidata-triply,wikidata-qlever,wikidata-openlinksw,wikidata-scatter,orkg,dblp,dblp-qlever,dblp-trier,wikidata-scholarly-experimental,wikidata-main-experimental} |

Name of the endpoint to use for queries - use | Name of the endpoint to use for queries - use | ||

--listEndpoints to list available endpoints | --listEndpoints to list available endpoints | ||

| + | -idb, --initDatabase initialize the database | ||

-le, --listEndpoints show the list of available endpoints | -le, --listEndpoints show the list of available endpoints | ||

| + | -lm, --listMetaqueries | ||

| + | show the list of available metaqueries | ||

| + | -ln, --listNamespaces | ||

| + | show the list of available namespaces | ||

| + | -lg, --listGraphs show the list of available graphs | ||

| + | -tq, --testQueries test run the queries | ||

| + | --limit LIMIT set limit parameter of query | ||

| + | --params PARAMS query parameters as Key-value pairs in the format | ||

| + | key1=value1,key2=value2 | ||

| + | --domain DOMAIN domain to filter queries | ||

| + | --namespace NAMESPACE | ||

| + | namespace to filter queries | ||

| + | -qn QUERYNAME, --queryName QUERYNAME | ||

| + | run a named query | ||

| + | -f {csv,json,html,xml,tsv,latex,mediawiki,raw,github}, --format {csv,json,html,xml,tsv,latex,mediawiki,raw,github} | ||

| + | --import IMPORT_FILE Import named queries from a JSON file. | ||

| + | --context CONTEXT context name to store the execution statistics with | ||

| + | --prefix_merger {RAW,SIMPLE_MERGER,ANALYSIS_MERGER} | ||

| + | query prefix merger to use | ||

</source> | </source> | ||

=== List Endpoints === | === List Endpoints === | ||

| Line 236: | Line 311: | ||

</source> | </source> | ||

{{pip|snapquery}} | {{pip|snapquery}} | ||

| + | |||

== Initial database import == | == Initial database import == | ||

<source lang='bash'> | <source lang='bash'> | ||

Latest revision as of 06:35, 4 December 2025

OsProject

| OsProject | |

|---|---|

| id | snapquery |

| state | active |

| owner | WolfgangFahl |

| title | snapquery |

| url | https://github.com/WolfgangFahl/snapquery |

| version | 0.2.3 |

| description | Just Query wikidata and other SPARQL endpoints by query name - a frontend to Introduce Named Queries and Named Query Middleware to wikidata and other SPARQL endpoints |

| date | 2025-12-04 |

| since | 2024-05-03 |

| until | |

Motivation

Querying Knowledge Graphs such as wikidata that are based on SPARQL is too complex for mere mortals. A simple

snapquery cats

should work. See https://snapquery.bitplan.com/query/wikidata-examples/cats for an example.

Demos

Usage

Web

nominate

To nominate a query, you need to first identify yourself with a persistent identifier (PID) such as:

- ORCID: e.g., 0000-0003-1279-3709 (Tim Berners-Lee)

- Wikidata ID: e.g., Q80 (Tim Berners-Lee)

- dblp author ID: e.g., b/TimBernersLee (Tim Berners-Lee)

You can either enter such an ID directly or use the lookup functionality to do so.

After identifying yourself, you can then assign the following information to your query:

- a domain

- a namespace

- a name

- a title

- a description

- a URL

- a SPARQL query

- comments

For example, the prominent cats query would be specified as:

- Domain: wikidata.org

- Name: Cats

- Namespace: snapquery-examples

- Title: Cats on Wikidata

- Description: This query retrieves all items classified under 'house cat' (Q146) on Wikidata

- Query:

# snapquery cats example

SELECT ?item ?itemLabel

WHERE {

?item wdt:P31 wd:Q146. # Must be a cat

OPTIONAL { ?item rdfs:label ?itemLabel. }

FILTER (LANG(?itemLabel) = "en")

}

Example

Nominator's Persistent Identifier

Nominated Query

see https://github.com/WolfgangFahl/snapquery/issues/61

RESTful API

http://snapquery.bitplan.com/docs

cats query

https://snapquery.bitplan.com/query/wikidata.org/snapquery-examples/cats

curl 'https://snapquery.bitplan.com/api/query/wikidata-examples/cats.mediawiki?limit=5'

cats

query

SELECT ?item ?itemLabel

WHERE {

?item wdt:P31 wd:Q146. # Must be a cat

SERVICE wikibase:label { bd:serviceParam wikibase:language "[AUTO_LANGUAGE],en". }

}

LIMIT 5

result

| item | itemLabel |

|---|---|

| http://www.wikidata.org/entity/Q378619 | CC |

| http://www.wikidata.org/entity/Q498787 | Muezza |

| http://www.wikidata.org/entity/Q677525 | Orangey |

| http://www.wikidata.org/entity/Q851190 | Mrs. Chippy |

| http://www.wikidata.org/entity/Q893453 | Unsinkable Sam |

sparql

curl 'https://snapquery.bitplan.com/api/sparql/wikidata-examples/cats'

SELECT ?item ?itemLabel

WHERE {

?item wdt:P31 wd:Q146. # Must be a cat

SERVICE wikibase:label { bd:serviceParam wikibase:language "[AUTO_LANGUAGE],en". }

}
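The two curl calls above follow a regular URL pattern. The following is a minimal Python sketch of that pattern, assuming that `/api/query/{namespace}/{name}.{format}?limit=N` and `/api/sparql/{namespace}/{name}` generalize beyond the cats example. The helper names `query_url` and `sparql_url` are hypothetical and not part of snapquery; see http://snapquery.bitplan.com/docs for the authoritative API description.

```python
from typing import Optional
from urllib.parse import quote, urlencode

# Public instance used in the curl examples above.
BASE_URL = "https://snapquery.bitplan.com"


def query_url(namespace: str, name: str, fmt: str = "mediawiki",
              limit: Optional[int] = None, base_url: str = BASE_URL) -> str:
    """Build the URL that returns the result of a named query.

    Hypothetical helper: the path layout is inferred from the curl example.
    """
    url = f"{base_url}/api/query/{quote(namespace)}/{quote(name)}.{fmt}"
    if limit is not None:
        url += "?" + urlencode({"limit": limit})
    return url


def sparql_url(namespace: str, name: str, base_url: str = BASE_URL) -> str:
    """Build the URL that returns the SPARQL text of a named query."""
    return f"{base_url}/api/sparql/{quote(namespace)}/{quote(name)}"


if __name__ == "__main__":
    print(query_url("wikidata-examples", "cats", limit=5))
    print(sparql_url("wikidata-examples", "cats"))
```

The resulting strings can be passed to `urllib.request.urlopen` or any other HTTP client to fetch the result or the query text.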

Command line

snapquery

Help

snapquery -h


usage: snapquery [-h] [-a] [--apache APACHE] [-c] [-d]

[--debugServer DEBUGSERVER] [--debugPort DEBUGPORT]

[--debugRemotePath DEBUGREMOTEPATH]

[--debugLocalPath DEBUGLOCALPATH] [-l] [-i INPUT] [-rol]

[--host HOST] [--port PORT] [-s] [-V] [-ep ENDPOINTPATH]

[-en {wikidata,wikidata-triply,wikidata-qlever,wikidata-openlinksw,wikidata-scatter,orkg,dblp,dblp-qlever,dblp-trier,wikidata-scholarly-experimental,wikidata-main-experimental}]

[-idb] [-le] [-lm] [-ln] [-lg] [-tq] [--limit LIMIT]

[--params PARAMS] [--domain DOMAIN] [--namespace NAMESPACE]

[-qn QUERYNAME]

[-f {csv,json,html,xml,tsv,latex,mediawiki,raw,github}]

[--import IMPORT_FILE] [--context CONTEXT]

[--prefix_merger {RAW,SIMPLE_MERGER,ANALYSIS_MERGER}]

[query_id]

Introduce Named Queries and Named Query Middleware to wikidata

positional arguments:

query_id Query ID in the format 'name[--namespace[@domain]]'

options:

-h, --help show this help message and exit

-a, --about show about info [default: False]

--apache APACHE create an apache configuration file for the given

domain

-c, --client start client [default: False]

-d, --debug show debug info [default: False]

--debugServer DEBUGSERVER

remote debug Server

--debugPort DEBUGPORT

remote debug Port

--debugRemotePath DEBUGREMOTEPATH

remote debug Server path mapping - remotePath - path

on debug server

--debugLocalPath DEBUGLOCALPATH

remote debug Server path mapping - localPath - path on

machine where python runs

-l, --local run with local file system access [default: False]

-i INPUT, --input INPUT

input file

-rol, --render_on_load

render on load [default: False]

--host HOST the host to serve / listen from [default: localhost]

--port PORT the port to serve from [default: 9862]

-s, --serve start webserver [default: False]

-V, --version show program's version number and exit

-ep ENDPOINTPATH, --endpointPath ENDPOINTPATH

path to yaml file to configure endpoints to use for

queries

-en {wikidata,wikidata-triply,wikidata-qlever,wikidata-openlinksw,wikidata-scatter,orkg,dblp,dblp-qlever,dblp-trier,wikidata-scholarly-experimental,wikidata-main-experimental}, --endpointName {wikidata,wikidata-triply,wikidata-qlever,wikidata-openlinksw,wikidata-scatter,orkg,dblp,dblp-qlever,dblp-trier,wikidata-scholarly-experimental,wikidata-main-experimental}

Name of the endpoint to use for queries - use

--listEndpoints to list available endpoints

-idb, --initDatabase initialize the database

-le, --listEndpoints show the list of available endpoints

-lm, --listMetaqueries

show the list of available metaqueries

-ln, --listNamespaces

show the list of available namespaces

-lg, --listGraphs show the list of available graphs

-tq, --testQueries test run the queries

--limit LIMIT set limit parameter of query

--params PARAMS query parameters as Key-value pairs in the format

key1=value1,key2=value2

--domain DOMAIN domain to filter queries

--namespace NAMESPACE

namespace to filter queries

-qn QUERYNAME, --queryName QUERYNAME

run a named query

-f {csv,json,html,xml,tsv,latex,mediawiki,raw,github}, --format {csv,json,html,xml,tsv,latex,mediawiki,raw,github}

--import IMPORT_FILE Import named queries from a JSON file.

--context CONTEXT context name to store the execution statistics with

--prefix_merger {RAW,SIMPLE_MERGER,ANALYSIS_MERGER}

query prefix merger to use
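The help text describes the positional `query_id` as `name[--namespace[@domain]]`. The sketch below shows one way to read that grammar in Python. It is an illustration only: `QueryId` and `parse_query_id` are hypothetical names, and snapquery's own parsing may differ, for example in how it fills in defaults for a missing namespace or domain.

```python
from typing import NamedTuple, Optional


class QueryId(NamedTuple):
    """Parts of a query id in the format 'name[--namespace[@domain]]'."""
    name: str
    namespace: Optional[str] = None
    domain: Optional[str] = None


def parse_query_id(query_id: str) -> QueryId:
    """Split a query id into name, namespace and domain (hypothetical helper)."""
    # The domain is the part after '@', the namespace the part after '--'.
    rest, _, domain = query_id.partition("@")
    name, _, namespace = rest.partition("--")
    if not name:
        raise ValueError(f"query id has no name: {query_id!r}")
    # In the documented grammar '@domain' is nested inside the namespace part.
    if domain and not namespace:
        raise ValueError(f"domain given without namespace: {query_id!r}")
    return QueryId(name, namespace or None, domain or None)


if __name__ == "__main__":
    print(parse_query_id("cats"))
    print(parse_query_id("cats--snapquery-examples@wikidata.org"))
```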

List Endpoints

snapquery --listEndpoints

wikidata:https://query.wikidata.org:https://query.wikidata.org/sparql(POST)

qlever-wikidata:https://qlever.cs.uni-freiburg.de/wikidata:https://qlever.cs.uni-freiburg.de/api/wikidata(POST)
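Each line of the `--listEndpoints` output above appears to have the shape `name:website:endpoint(METHOD)`. The following Python sketch splits such a line into its parts. The field names `website` and `endpoint` are assumptions based on the two sample lines, and `parse_endpoint_line` is a hypothetical helper, not a snapquery function.

```python
import re
from typing import NamedTuple


class EndpointInfo(NamedTuple):
    name: str       # endpoint name as accepted by --endpointName
    website: str    # assumed: human-facing website of the service
    endpoint: str   # assumed: SPARQL endpoint URL
    method: str     # HTTP method shown in parentheses


# The website part is matched non-greedily so that the split happens at the
# colon that is directly followed by the next URL.
LINE_PATTERN = re.compile(
    r"^(?P<name>[^:]+):(?P<website>https?://.+?):"
    r"(?P<endpoint>https?://.+)\((?P<method>[A-Z]+)\)$"
)


def parse_endpoint_line(line: str) -> EndpointInfo:
    """Parse one line of the endpoint listing (format inferred from samples)."""
    match = LINE_PATTERN.match(line.strip())
    if match is None:
        raise ValueError(f"unexpected endpoint line: {line!r}")
    return EndpointInfo(**match.groupdict())


if __name__ == "__main__":
    sample = "wikidata:https://query.wikidata.org:https://query.wikidata.org/sparql(POST)"
    print(parse_endpoint_line(sample))
```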

Run Query

cats

snapquery -qn cats --limit 10 -f mediawiki

query

SELECT ?item ?itemLabel

WHERE {

?item wdt:P31 wd:Q146. # Must be a cat

SERVICE wikibase:label { bd:serviceParam wikibase:language "[AUTO_LANGUAGE],en". }

}

result

| item | itemLabel |

|---|---|

| http://www.wikidata.org/entity/Q378619 | CC |

| http://www.wikidata.org/entity/Q498787 | Muezza |

| http://www.wikidata.org/entity/Q677525 | Orangey |

| http://www.wikidata.org/entity/Q851190 | Mrs. Chippy |

| http://www.wikidata.org/entity/Q893453 | Unsinkable Sam |

| http://www.wikidata.org/entity/Q1050083 | Catmando |

| http://www.wikidata.org/entity/Q1185550 | Oscar |

| http://www.wikidata.org/entity/Q1201902 | Tama |

| http://www.wikidata.org/entity/Q1207136 | Dewey Readmore Books |

| http://www.wikidata.org/entity/Q1371145 | Socks |

scholia author_list-of-publications with parameter Q80 (papers by Tim Berners-Lee)

snapquery --namespace scholia -qn author_list-of-publications --params q=Q80 --limit 2 -f mediawiki

query

#defaultView:Table

PREFIX target: <http://www.wikidata.org/entity/Q80>

SELECT

(MIN(?dates) AS ?date)

?work ?workLabel

(GROUP_CONCAT(DISTINCT ?type_label; separator=", ") AS ?type)

(SAMPLE(?pages_) AS ?pages)

?venue ?venueLabel

(GROUP_CONCAT(DISTINCT ?author_label; separator=", ") AS ?authors)

(CONCAT("../authors/", GROUP_CONCAT(DISTINCT SUBSTR(STR(?author), 32); separator=",")) AS ?authorsUrl)

WHERE {

?work wdt:P50 target: .

?work wdt:P50 ?author .

OPTIONAL {

?author rdfs:label ?author_label_ . FILTER (LANG(?author_label_) = 'en')

}

BIND(COALESCE(?author_label_, SUBSTR(STR(?author), 32)) AS ?author_label)

OPTIONAL { ?work wdt:P31 ?type_ . ?type_ rdfs:label ?type_label . FILTER (LANG(?type_label) = 'en') }

?work wdt:P577 ?datetimes .

BIND(xsd:date(?datetimes) AS ?dates)

OPTIONAL { ?work wdt:P1104 ?pages_ }

OPTIONAL { ?work wdt:P1433 ?venue }

SERVICE wikibase:label { bd:serviceParam wikibase:language "en,da,de,es,fr,jp,no,ru,sv,zh". }

}

GROUP BY ?work ?workLabel ?venue ?venueLabel

ORDER BY DESC(?date)

LIMIT 2

result

| date | work | workLabel | type | venue | venueLabel | authors | authorsUrl | pages |

|---|---|---|---|---|---|---|---|---|

| 2023-07-07 | http://www.wikidata.org/entity/Q125750037 | Linked Data - The Story So Far | chapter | http://www.wikidata.org/entity/Q125750044 | Linking the World’s Information | Christian Bizer, Tom Heath, Tim Berners-Lee | ../authors/Q17744291,Q17744272,Q80 | |

| 2017-01-01 | http://www.wikidata.org/entity/Q30096408 | Linked Data Notifications: A Resource-Centric Communication Protocol | scholarly article | http://www.wikidata.org/entity/Q30092087 | The Semantic Web: 14th International Conference, ESWC 2017, Portorož, Slovenia, May 28 – June 1, 2017, Proceedings, Part I | Christoph Lange, Amy Guy, Sarven Capadisli, Sören Auer, Tim Berners-Lee | ../authors/Q30276490,Q30086138,Q30078591,Q27453085,Q80 | 17 |
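In the example above, `--params q=Q80` ends up as `Q80` in the `PREFIX target:` line of the query. The Python sketch below illustrates the idea: it parses the documented `key1=value1,key2=value2` format and fills placeholders in a query template. The `{{ q }}` placeholder syntax and the helper names `parse_params` and `apply_params` are assumptions made for illustration; snapquery's actual template mechanism may differ.

```python
import re
from typing import Dict


def parse_params(params: str) -> Dict[str, str]:
    """Parse 'key1=value1,key2=value2' into a dictionary."""
    result: Dict[str, str] = {}
    for pair in params.split(","):
        if not pair.strip():
            continue  # tolerate empty segments such as a trailing comma
        key, sep, value = pair.partition("=")
        if not sep or not key.strip():
            raise ValueError(f"expected key=value, got {pair!r}")
        result[key.strip()] = value.strip()
    return result


# Assumed placeholder syntax: {{ name }} with optional inner whitespace.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def apply_params(template: str, params: Dict[str, str]) -> str:
    """Replace every {{ name }} placeholder with its parameter value."""
    def replace(match: "re.Match[str]") -> str:
        key = match.group(1)
        if key not in params:
            raise KeyError(f"missing query parameter: {key}")
        return params[key]

    return PLACEHOLDER.sub(replace, template)


if __name__ == "__main__":
    template = "PREFIX target: <http://www.wikidata.org/entity/{{ q }}>"
    print(apply_params(template, parse_params("q=Q80")))
```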

Mass Import Queries

snapquery --import samples/scholia.json

Importing Named Queries: 100%|████████████| 372/372 [00:00<00:00, 226127.69it/s]

Imported 372 named queries from samples/scholia.json.

Installation

pip install snapquery

# alternatively if your pip is not a python3 pip

pip3 install snapquery

# local install from source directory of snapquery

pip install .

upgrade

pip install snapquery -U

# alternatively if your pip is not a python3 pip

pip3 install snapquery -U

Initial database import

scripts/restore_db

Importing Named Queries: 100%|██████████████████| 28/28 [00:25<00:00, 1.09it/s]

Imported 28 named queries from samples/ceur-ws.json.

Importing Named Queries: 100%|████████████| 372/372 [00:00<00:00, 210308.81it/s]

Imported 372 named queries from samples/scholia.json.

Importing Named Queries: 100%|████████████| 298/298 [00:00<00:00, 252149.00it/s]

Imported 298 named queries from samples/wikidata-examples.json.